SQS Lambda Concurrency

How do SQS and Lambda work together when it comes to concurrency?

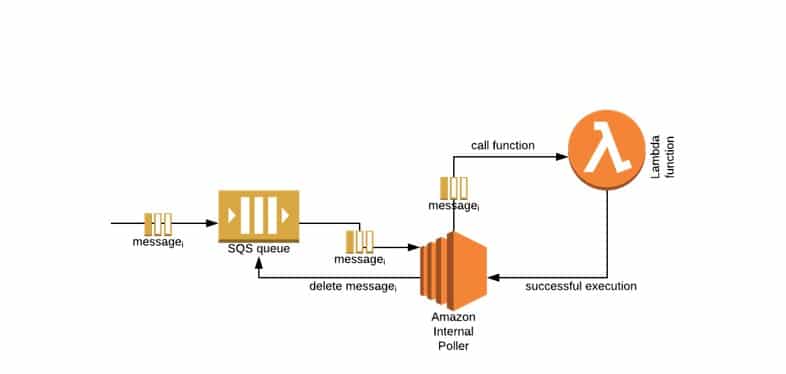

When working with SQS directly, you have to wait for messages to arrive, process them, and then delete them from the queue. If you forget to delete a message, it reappears after the configured VisibilityTimeout: SQS assumes that processing failed and makes the message available for consumption again, so no messages are lost. None of this applies when you use SQS as an event source for Lambda, because you never touch the SQS part yourself!
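
To make the manual receive, process, and delete cycle concrete, here is a minimal sketch. It assumes `sqs` behaves like a `boto3.client("sqs")` object; the function name `drain_once` and the `process` callback are hypothetical names chosen for illustration.

```python
from typing import Any, Callable


def drain_once(sqs: Any, queue_url: str, process: Callable[[str], None]) -> int:
    """Receive one batch, process each message, delete the ones that succeed.

    Returns the number of messages deleted.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,      # long polling: wait up to 20 s for messages
    )
    deleted = 0
    for message in response.get("Messages", []):
        try:
            process(message["Body"])
        except Exception:
            # Not deleted: the message becomes visible again after the
            # visibility timeout and will be delivered once more.
            continue
        sqs.delete_message(
            QueueUrl=queue_url,
            ReceiptHandle=message["ReceiptHandle"],
        )
        deleted += 1
    return deleted
```

With Lambda as the consumer, this loop is what the service runs on your behalf, so your function only sees the message bodies.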

You are also bound by the function-level concurrent execution limit, which by default is a shared pool of unreserved concurrency of 1000 per region. You can lower it for a single function by setting the reserved concurrent executions parameter to a subset of your account limit. Keep in mind, however, that this number is subtracted from the shared pool, which affects your other functions! In addition, if your Lambda is VPC-enabled, Amazon EC2 limits apply as well.
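
The subtraction from the shared pool can be sketched as simple arithmetic. The function and constant names are hypothetical, and the floor of 100 unreserved executions is my understanding of the AWS rule, not something stated in this article.

```python
from typing import Mapping

ACCOUNT_LIMIT = 1000   # default concurrent executions per region (from the text)
MIN_UNRESERVED = 100   # assumption: AWS keeps at least this much unreserved


def unreserved_pool(reserved: Mapping[str, int],
                    account_limit: int = ACCOUNT_LIMIT) -> int:
    """Return what is left of the shared pool after all reservations."""
    remaining = account_limit - sum(reserved.values())
    if remaining < MIN_UNRESERVED:
        raise ValueError(
            f"only {remaining} unreserved executions would remain"
        )
    return remaining


# Two functions reserve 50 and 200; every other function shares the rest.
print(unreserved_pool({"worker-a": 50, "worker-b": 200}))  # 750
```

With boto3, the reservation itself would typically be set through `put_function_concurrency(FunctionName=..., ReservedConcurrentExecutions=...)` on the Lambda client.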

If you push more load into your SQS queue, you will see the number of messages in flight start to rise. That is your Lambda slowly scaling out in response to the queue size, until it eventually hits the concurrency limit. At that point, messages are still consumed from the queue, but the synchronous invocation fails with an exception. This is where the SQS retry policy is most useful.
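
The text does not say which retry policy is meant. Assuming it refers to an SQS redrive policy with a dead-letter queue, the queue attribute could be built roughly like this. The helper name and the default of 5 receives are illustrative assumptions.

```python
import json
from typing import Dict


def redrive_attributes(dead_letter_queue_arn: str,
                       max_receive_count: int = 5) -> Dict[str, str]:
    """Build the queue attribute that moves a message to a dead-letter
    queue once it has been received `max_receive_count` times."""
    policy = {
        "deadLetterTargetArn": dead_letter_queue_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # SQS expects the policy as a JSON string, not a nested object.
    return {"RedrivePolicy": json.dumps(policy)}
```

The result would be passed to `set_queue_attributes(QueueUrl=..., Attributes=...)` on a boto3 SQS client. Note that every throttled delivery counts as a receive, so a low count can push healthy messages to the dead-letter queue under heavy throttling.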

What do you think happens when you add one queue as the event source for two distinct functions? Yep, it acts as a load balancer.


Does this work?

The tests were run under the following assumptions:

- All messages were available in the queue before the Lambda trigger was enabled

- SQS visibility timeout = one hour

- Every test case was performed in a separate environment and at a different time

- The Lambda does nothing but sleep for a specified period
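
A test function of the kind described above might look like the following sketch. The environment variable `SLEEP_SECONDS` and the injectable `sleep` argument are assumptions made for illustration; the original test code is not shown in this article.

```python
import os
import time

# Hypothetical setting; the tests below use sleeps of 3 s and 240 s.
SLEEP_SECONDS = float(os.environ.get("SLEEP_SECONDS", "3"))


def handler(event, context, sleep=time.sleep):
    """Lambda entry point for an SQS event source.

    Lambda delivers the batch under event["Records"]; returning without
    an exception tells Lambda it may delete those messages from the queue.
    `sleep` is injectable only so the function can be run without waiting.
    """
    records = event.get("Records", [])
    sleep(SLEEP_SECONDS)  # simulate the work
    return {"received": len(records)}
```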

These were the results:

– Normal Use Cases

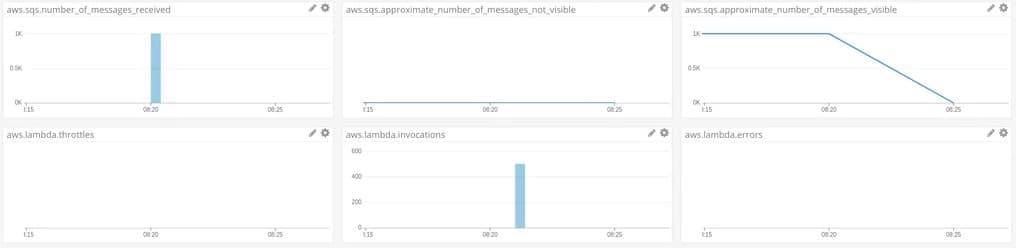

A total of 1000 messages with a three-second sleep:

> Nothing interesting happens

> It works just as expected

> The messages are consumed quickly

> CloudWatch does not even register the scaling process


– Normal Use Cases + Heavy Loads

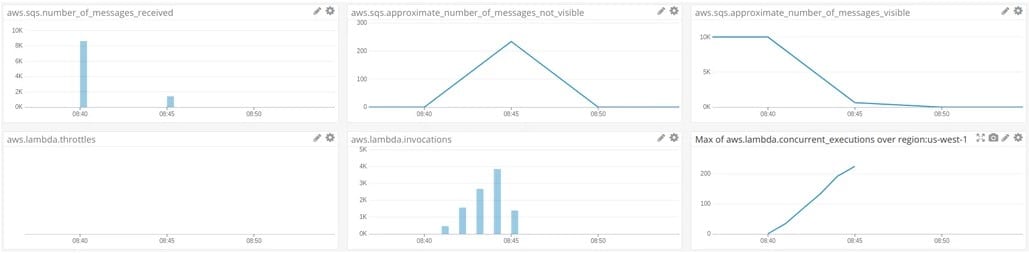

Again a three-second sleep, but with a total of 10,000 messages this time:

> The message count exceeds the concurrency limit

> The scale-out process takes longer than the execution of the first Lambdas

> No throttling

> Consuming all of the messages takes a bit longer


– Long-running lambdas

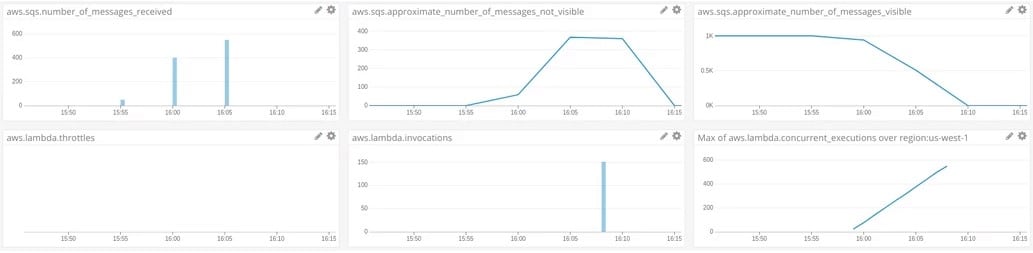

Back to 1000 messages, this time with a 240-second sleep:

> AWS handles the scale-out process for the internal workers

> Around 550 Lambdas run concurrently


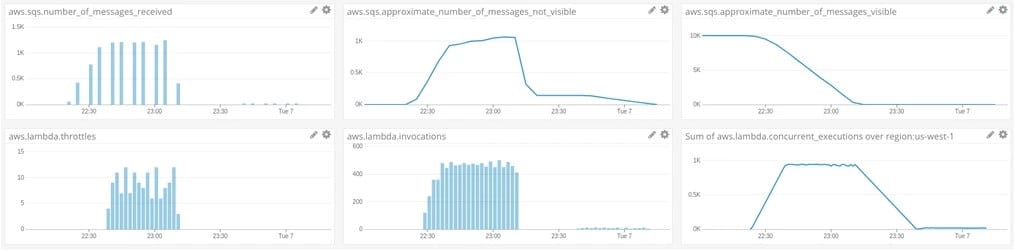

– Hitting the concurrency limit

Again a 240-second sleep, but now pushing 10,000 messages against a concurrency limit of 1000:

> AWS reacts to the number of messages available in SQS and keeps scaling its internal workers until the concurrency limit is reached

> It has no way of predicting how many Lambdas it can run, so it eventually starts to throttle

> Throttled Lambdas return exceptions to the workers, signaling them to stop, but the workers keep trying in the hope that this is not our global limit and that other functions are merely occupying the pool

> AWS does not retry the function execution

> The message returns to the queue after the defined VisibilityTimeout

> Some invocations are still visible after 23:30
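
Why throttled messages show up again so late can be illustrated with a toy in-memory model of the visibility timeout. This is not how SQS is implemented; the class and method names are hypothetical, and time is passed in explicitly so the model stays deterministic.

```python
from typing import Dict, Optional


class TinyQueue:
    """Toy model of SQS visibility, for illustration only."""

    def __init__(self, visibility_timeout: float) -> None:
        self.visibility_timeout = visibility_timeout
        # message id -> moment at which the message becomes visible
        self._visible_at: Dict[str, float] = {}

    def send(self, message_id: str, now: float = 0.0) -> None:
        self._visible_at[message_id] = now

    def receive(self, now: float) -> Optional[str]:
        """Hand out one visible message and hide it for the timeout."""
        for message_id, visible_at in self._visible_at.items():
            if visible_at <= now:
                self._visible_at[message_id] = now + self.visibility_timeout
                return message_id
        return None

    def delete(self, message_id: str) -> None:
        """Called after successful processing; the message is gone for good."""
        self._visible_at.pop(message_id, None)
```

With `TinyQueue(visibility_timeout=3600)`, a message received at time 0 by a worker that then gets throttled is never deleted, stays hidden for the next hour, and is only handed out again at time 3600. That matches the one-hour timeout used in these tests and the late invocations seen in the chart.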


A similar scenario occurs when you set your own reserved concurrency pool. Running the same test with a maximum of 50 concurrent executions is extremely slow because of the throttling.

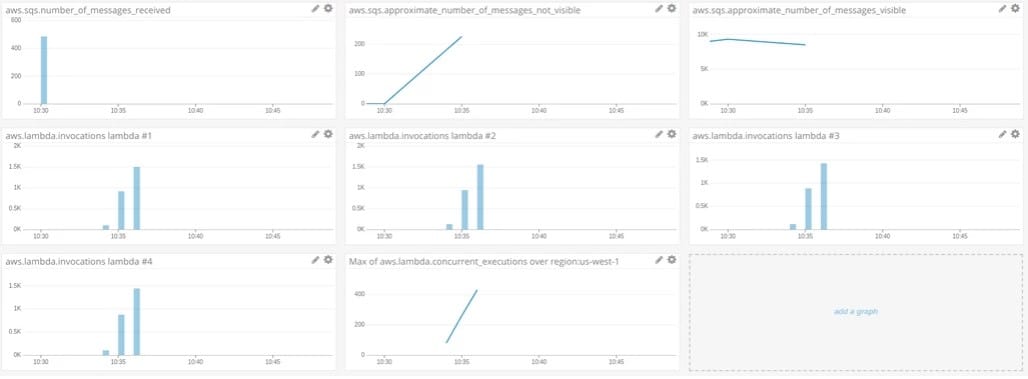

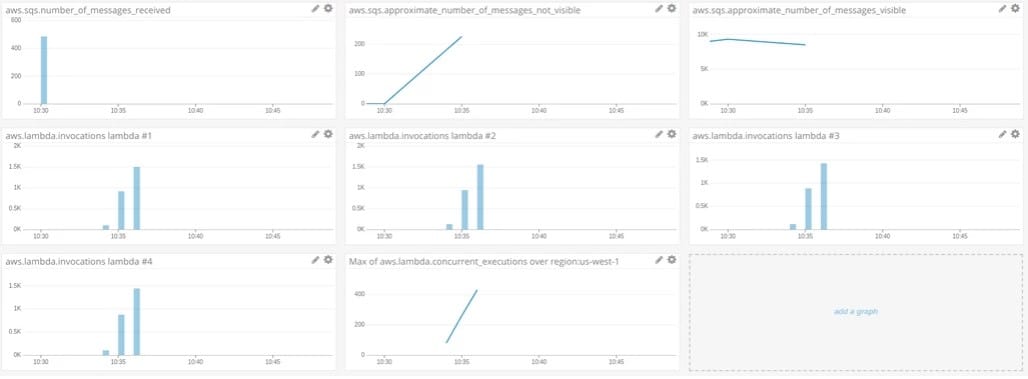
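
A back-of-the-envelope lower bound shows how much a small pool hurts even before throttling is taken into account. The sketch below assumes an ideal scheduler with no scale-out ramp and no redelivery delays. The batch size used in the tests is not stated, so one message per invocation is assumed here.

```python
import math


def min_drain_seconds(messages: int, seconds_per_invocation: float,
                      concurrency: int, batch_size: int = 1) -> float:
    """Best-case time to empty the queue: invocations run in full
    'waves' of `concurrency` parallel executions."""
    invocations = math.ceil(messages / batch_size)
    waves = math.ceil(invocations / concurrency)
    return waves * seconds_per_invocation


print(min_drain_seconds(10_000, 240, 1000))  # 2400 s, i.e. 40 minutes
print(min_drain_seconds(10_000, 240, 50))    # 48000 s, i.e. over 13 hours
```

Real runs take longer than this bound, since every throttled message also waits out the visibility timeout before it is retried.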

– Multiple Lambda workers

You can subscribe multiple functions to a single queue!

10,000 messages are sent to a queue that is set as the event source for four distinct functions:

> Each Lambda is executed about 2500 times

> This setup behaves much like a load balancer, but without the ability to subscribe Lambdas from different regions and build a global load balancer
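
Wiring one queue to several functions could be sketched as below. It assumes `lambda_client` behaves like `boto3.client("lambda")`; the helper name `attach_queue` and the batch size of 10 are illustrative choices, not taken from the tests.

```python
from typing import Any, Iterable, List


def attach_queue(lambda_client: Any, queue_arn: str,
                 function_names: Iterable[str],
                 batch_size: int = 10) -> List[str]:
    """Create one event source mapping per function, all on the same queue.

    Returns the UUIDs of the created mappings.
    """
    uuids = []
    for name in function_names:
        response = lambda_client.create_event_source_mapping(
            EventSourceArn=queue_arn,
            FunctionName=name,
            BatchSize=batch_size,
            Enabled=True,
        )
        uuids.append(response["UUID"])
    return uuids
```

Each message is still delivered to only one of the functions, which is what produces the roughly even split reported above.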



SQS as an event source for Lambda lets you process messages simply, without the need for containers or EC2 instances. Remember, however, that these are Lambda workers, so this solution is not suitable for heavy processing: you are bound by the Lambda timeout (15 minutes at most), along with the memory constraints and the concurrency limit.
