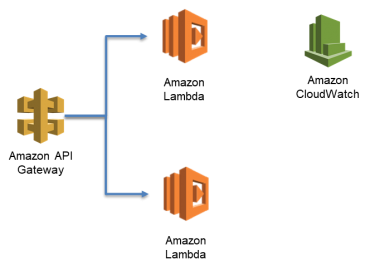

How Does AWS Lambda Concurrency Work?

In short, AWS Lambda concurrency is the number of requests that your function is serving at any given time. When your function is invoked, Lambda allocates an instance of it to process the event. When the function code finishes running, that instance can handle another request. If the function is invoked again while a request is still being processed, Lambda allocates another instance, which increases the function's concurrency.
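As a rough way to reason about this definition, steady-state concurrency can be estimated as the request rate multiplied by the average time each request takes. The sketch below is a back-of-the-envelope model, not a Lambda API; the function name `estimate_concurrency` and the sample figures are made up for illustration.

```python
import math

def estimate_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate how many instances are busy at once under steady traffic."""
    # Each request occupies one instance for its whole duration, so the number
    # of requests in flight is roughly rate x duration (rounded up).
    return math.ceil(requests_per_second * avg_duration_seconds)

# 100 requests per second, each taking about half a second
print(estimate_concurrency(100, 0.5))  # 50
```

Real traffic is bursty, so treat the result as a starting point rather than a guarantee.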

Concurrency is subject to a Regional limit that is shared by all functions in a Region. Lambda offers two concurrency controls:

Reserved concurrency

Use reserved concurrency to ensure that your function can always reach a specific level of concurrency. No other function can use the concurrency you reserve. Reserved concurrency also caps the function's maximum concurrency, and it applies to the function as a whole, including its versions and aliases.

Provisioned concurrency

Use provisioned concurrency to let your function scale without fluctuations in latency. By allocating provisioned concurrency before an increase in invocations, you ensure that every request is served by an initialized instance with very low latency. You can configure provisioned concurrency on a version of your function or on an alias.

Lambda also integrates with Application Auto Scaling, which lets you manage provisioned concurrency on a schedule or based on utilization. Scheduled scaling increases provisioned concurrency in anticipation of peak traffic. To increase provisioned concurrency automatically as needed, use the Application Auto Scaling API to register a target and create a scaling policy.

Provisioned concurrency counts towards a function's reserved concurrency and towards Regional limits. If the amount of provisioned concurrency on a function's versions and aliases adds up to the function's reserved concurrency, every invocation runs on provisioned concurrency. This configuration also throttles the unpublished version of the function ($LATEST), which prevents it from executing.

Reserved Concurrency Configuration

To manage reserved concurrency settings for a function, use the Lambda console.

Reserving concurrency for a function

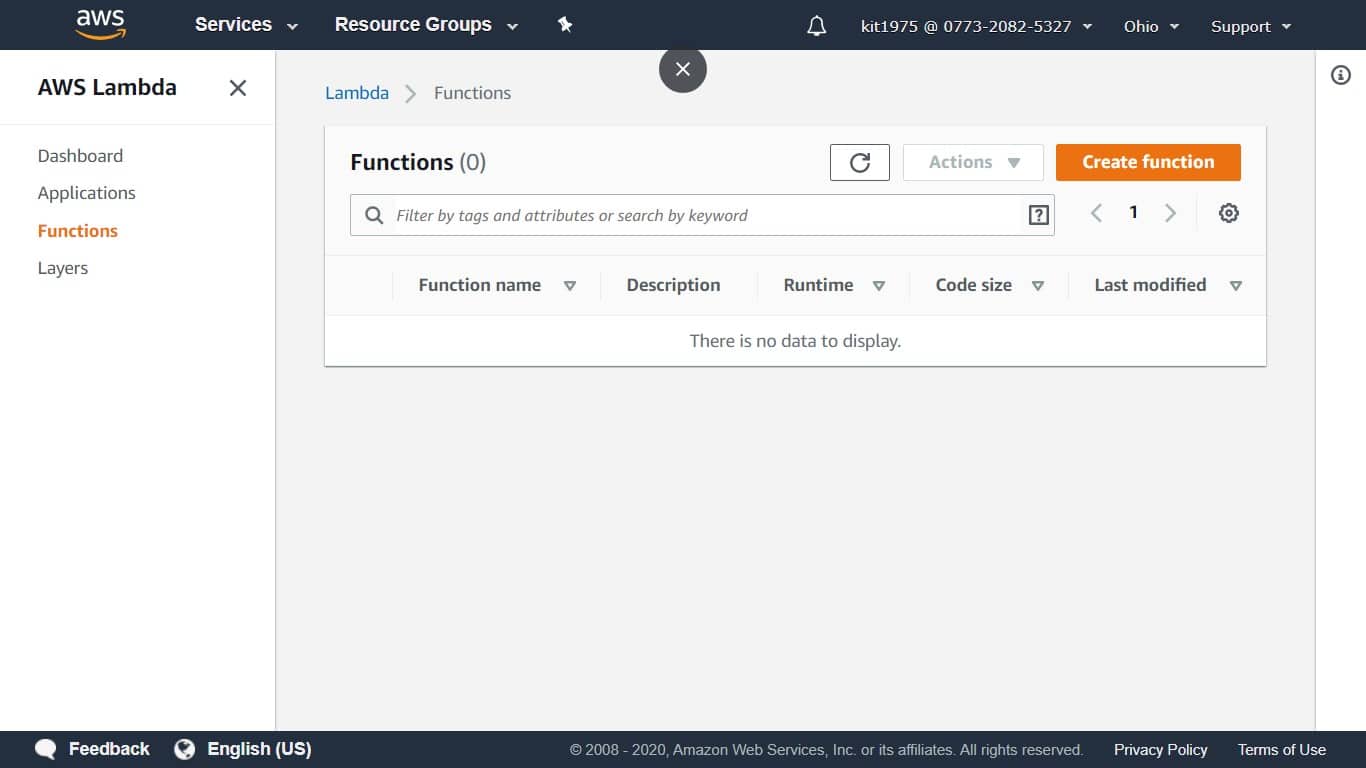

AWS Lambda Concurrency – functions

- Open the Functions page of the Lambda console.

- Choose a function.

- Under Concurrency, choose Reserve concurrency.

- Enter the amount of concurrency to reserve for the function.

- Choose Save.

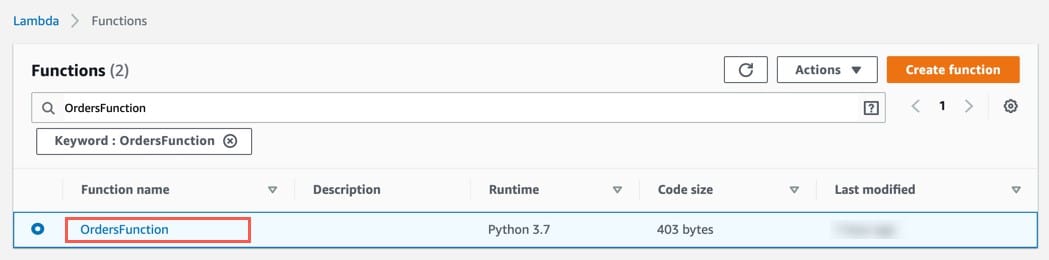

AWS Lambda Concurrency – reserved concurrency

You can reserve up to the Unreserved account concurrency value shown in the console, minus 100, which stays available for functions that don't have reserved concurrency. To throttle a function, set its reserved concurrency to zero. This stops events from being processed until you remove the limit.
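The arithmetic behind that limit can be sketched as follows. This is a minimal illustration that assumes the buffer of 100 described above; `max_reservable` is a hypothetical helper, not part of any AWS SDK, and the sample figure of 900 is illustrative.

```python
UNRESERVED_BUFFER = 100  # concurrency kept back for functions without a reservation

def max_reservable(unreserved_account_concurrency: int) -> int:
    """Largest reserved concurrency value that can still be assigned."""
    return max(unreserved_account_concurrency - UNRESERVED_BUFFER, 0)

print(max_reservable(900))  # 800
```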

The example below shows two functions with pools of reserved concurrency, along with the unreserved concurrency pool used by other functions. Throttling errors occur when all of the concurrency in a pool is in use.


Reserving concurrency has the following effects:

- Other functions can't prevent your function from scaling.

- Your function can't scale out of control.

Provisioned Concurrency Configuration

To manage provisioned concurrency settings for a version or alias, use the Lambda console.

Allocating provisioned concurrency for an alias or version

- Open the Functions page of the Lambda console.

AWS Lambda Concurrency – lambda functions page

- Choose a function.

- Under Provisioned concurrency configurations, choose Add.

- Choose an alias or version.

- Enter the amount of provisioned concurrency to allocate.

- Choose Save.

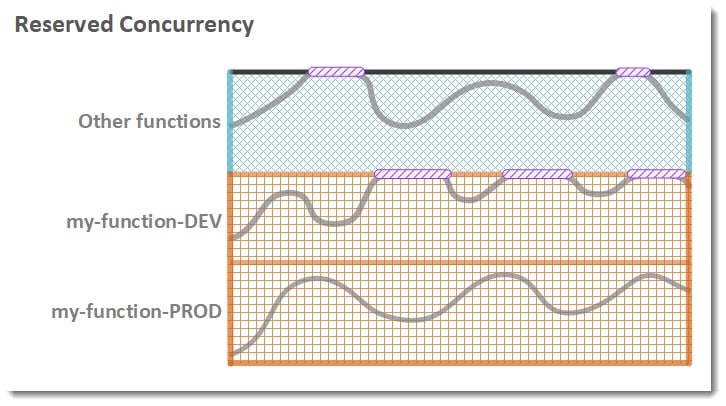

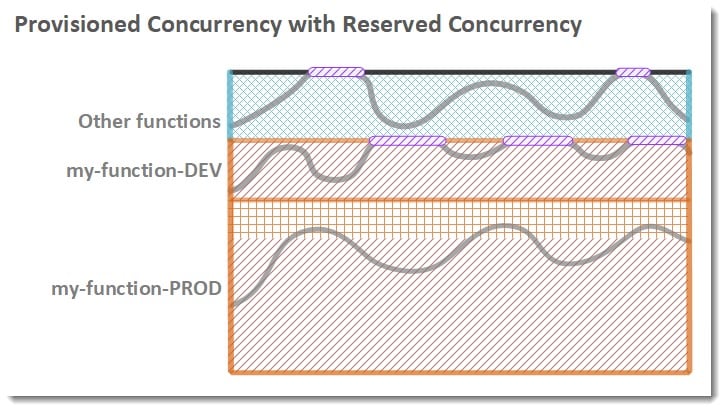

In the example below, the my-function-DEV and my-function-PROD functions are both configured with reserved and provisioned concurrency.

my-function-DEV: the whole pool of reserved concurrency is also provisioned concurrency. Every invocation either runs on provisioned concurrency or is throttled.

my-function-PROD: part of the reserved concurrency pool is standard concurrency. When all of the provisioned concurrency is in use, the function scales on standard concurrency to serve additional requests.
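One way to picture how the two settings interact is a small model that decides where the next invocation runs. This is a simplified sketch, not how Lambda is implemented; the function `place_invocation` and the numbers are hypothetical, and it assumes a single alias holds all of the function's provisioned concurrency.

```python
def place_invocation(in_flight: int, provisioned: int, reserved: int) -> str:
    """Classify the next invocation given the requests already in flight."""
    if in_flight < provisioned:
        return "provisioned"  # an initialized instance is still free
    if in_flight < reserved:
        return "standard"     # spill over into the rest of the reserved pool
    return "throttled"        # the reserved pool is exhausted

# DEV-style setup: the whole reserved pool is provisioned
print(place_invocation(in_flight=100, provisioned=100, reserved=100))  # throttled
# PROD-style setup: part of the reserved pool is standard concurrency
print(place_invocation(in_flight=100, provisioned=100, reserved=150))  # standard
```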

AWS Lambda Concurrency – provisioned concurrency with reserved concurrency

The Legend

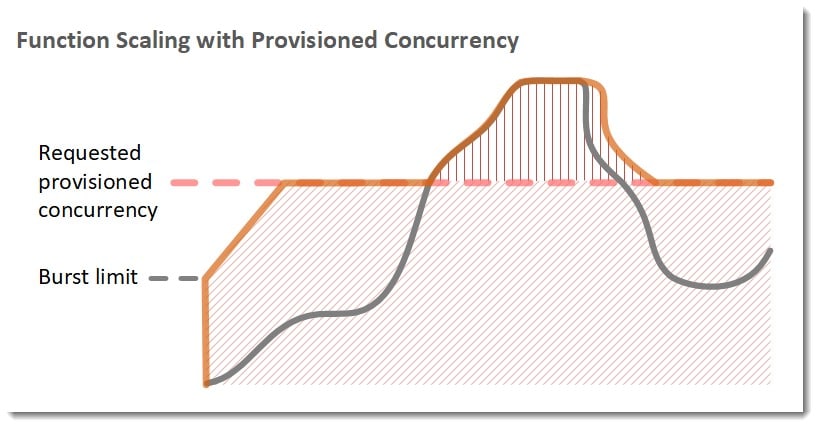

Provisioned concurrency doesn't come online immediately after you configure it. Lambda starts allocating it after a minute or two of preparation. Similar to how functions scale under load, up to 3,000 instances of the function can be initialized at once, depending on the Region. After the initial burst, instances are allocated at a steady rate of 500 per minute until the request is fulfilled. When you request provisioned concurrency for multiple functions, or for multiple versions of one function, in the same Region, the scaling limits apply across all of the requests.
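To get a feel for the timing, the burst-then-steady pattern can be modeled with a few lines of arithmetic. This sketch uses the figures quoted in the text (an initial burst of up to 3,000, then 500 per minute) as default parameters; actual limits depend on the Region and may change. The helper `minutes_to_allocate` is hypothetical, and it ignores the initial minute or two of preparation.

```python
import math

def minutes_to_allocate(requested: int, burst: int = 3000, per_minute: int = 500) -> int:
    """Minutes of steady allocation needed after the initial burst."""
    remaining = max(requested - burst, 0)
    # Anything beyond the burst is filled at a fixed rate per minute.
    return math.ceil(remaining / per_minute)

print(minutes_to_allocate(5000))  # 4
print(minutes_to_allocate(1000))  # 0
```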

AWS Lambda Concurrency – function scaled with provisioned concurrency


The following metrics are emitted by Lambda for provisioned concurrency:

- ProvisionedConcurrentExecutions

- ProvisionedConcurrencyInvocations

- ProvisionedConcurrencySpilloverInvocations

- ProvisionedConcurrencyUtilization
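As a rough guide to how these metrics relate, utilization is the number of provisioned instances in use divided by the amount of provisioned concurrency allocated. The sketch below illustrates that ratio with made-up numbers; `utilization` is a hypothetical helper, and in practice you would read these values from CloudWatch rather than compute them yourself.

```python
def utilization(provisioned_concurrent_executions: int, allocated: int) -> float:
    """Fraction of the allocated provisioned concurrency that is in use."""
    if allocated == 0:
        return 0.0  # nothing allocated, so nothing can be in use
    return provisioned_concurrent_executions / allocated

print(utilization(70, 100))  # 0.7
```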

Concurrency Configuration through Lambda API

To manage concurrency settings and autoscaling with the AWS CLI or an AWS SDK, use the following API operations:

- PutFunctionConcurrency

- GetFunctionConcurrency

- DeleteFunctionConcurrency

- PutProvisionedConcurrencyConfig

- GetProvisionedConcurrencyConfig

- ListProvisionedConcurrencyConfigs

- DeleteProvisionedConcurrencyConfig

- GetAccountSettings

- (Application Auto Scaling) RegisterScalableTarget

- (Application Auto Scaling) PutScalingPolicy

To configure reserved concurrency with the AWS CLI, use the put-function-concurrency command. The following command reserves a concurrency of 100 for a function named my-function:

    $ aws lambda put-function-concurrency --function-name my-function --reserved-concurrent-executions 100
    {
        "ReservedConcurrentExecutions": 100
    }

To allocate provisioned concurrency for a function, use the put-provisioned-concurrency-config command. The following command allocates a concurrency of 100 for the BLUE alias of a function named my-function:

    $ aws lambda put-provisioned-concurrency-config --function-name my-function \
        --qualifier BLUE --provisioned-concurrent-executions 100
    {
        "RequestedProvisionedConcurrentExecutions": 100,
        "AllocatedProvisionedConcurrentExecutions": 0,
        "Status": "IN_PROGRESS",
        "LastModified": "2019-11-21T19:32:12+0000"
    }

To have Application Auto Scaling manage provisioned concurrency, configure target tracking scaling.

First, register a function's alias as a scaling target. The following example registers the BLUE alias of a function named my-function:

    $ aws application-autoscaling register-scalable-target --service-namespace lambda \
        --resource-id function:my-function:BLUE --min-capacity 1 --max-capacity 100 \
        --scalable-dimension lambda:function:ProvisionedConcurrency

Next, apply a scaling policy to the target. The following example configures Application Auto Scaling to adjust the provisioned concurrency configuration for an alias so that utilization stays near 70 percent:

    $ aws application-autoscaling put-scaling-policy --service-namespace lambda \
        --scalable-dimension lambda:function:ProvisionedConcurrency --resource-id function:my-function:BLUE \
        --policy-name my-policy --policy-type TargetTrackingScaling \
        --target-tracking-scaling-policy-configuration '{ "TargetValue": 0.7, "PredefinedMetricSpecification": { "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization" }}'
    {
        "PolicyARN": "arn:aws:autoscaling:us-east-2:123456789012:scalingPolicy:12266dbb-1524-xmpl-a64e-9a0a34b996fa:resource/lambda/function:my-function:BLUE:policyName/my-policy",
        "Alarms": [
            {
                "AlarmName": "TargetTracking-function:my-function:BLUE-AlarmHigh-aed0e274-xmpl-40fe-8cba-2e78f000c0a7",
                "AlarmARN": "arn:aws:cloudwatch:us-east-2:123456789012:alarm:TargetTracking-function:my-function:BLUE-AlarmHigh-aed0e274-xmpl-40fe-8cba-2e78f000c0a7"
            },
            {
                "AlarmName": "TargetTracking-function:my-function:BLUE-AlarmLow-7e1a928e-xmpl-4d2b-8c01-782321bc6f66",
                "AlarmARN": "arn:aws:cloudwatch:us-east-2:123456789012:alarm:TargetTracking-function:my-function:BLUE-AlarmLow-7e1a928e-xmpl-4d2b-8c01-782321bc6f66"
            }
        ]
    }

Application Auto Scaling creates two alarms in CloudWatch.

The first alarm triggers when the utilization of provisioned concurrency consistently exceeds 70 percent. When this happens, Application Auto Scaling allocates more provisioned concurrency to reduce utilization.

The second alarm triggers when utilization is consistently below 63 percent, which is 90 percent of the 70 percent target. When this happens, Application Auto Scaling reduces the alias's provisioned concurrency.
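The two thresholds follow directly from the target value. As a minimal sketch of that arithmetic, assuming the low threshold is always 90 percent of the target as in the example above (the helper name `alarm_thresholds` is hypothetical):

```python
from typing import Tuple

def alarm_thresholds(target: float) -> Tuple[float, float]:
    """Return (high, low) utilization thresholds for a target-tracking policy."""
    high = target
    low = round(target * 0.9, 4)  # rounding hides floating-point noise
    return high, low

print(alarm_thresholds(0.7))  # (0.7, 0.63)
```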

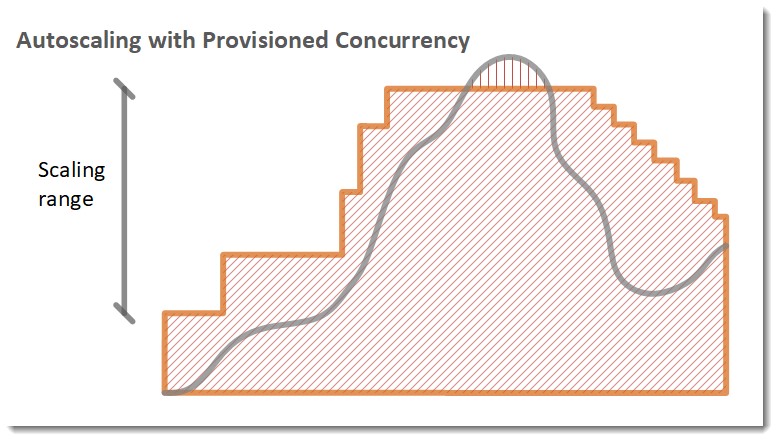

The example below shows a function that scales between a minimum and maximum amount of provisioned concurrency based on utilization. As the number of open requests increases, Application Auto Scaling increases provisioned concurrency in large steps until it reaches the configured maximum. The function continues to scale on standard concurrency until utilization starts to drop. When utilization stays consistently low, Application Auto Scaling decreases provisioned concurrency in smaller periodic steps.

AWS Lambda Concurrency – autoscaling with provisioned concurrency


To check your account's concurrency limits in a Region, use the get-account-settings command:

    $ aws lambda get-account-settings
    {
        "AccountLimit": {
            "TotalCodeSize": 80530636800,
            "CodeSizeUnzipped": 262144000,
            "CodeSizeZipped": 52428800,
            "ConcurrentExecutions": 1000,
            "UnreservedConcurrentExecutions": 900
        },
        "AccountUsage": {
            "TotalCodeSize": 174913095,
            "FunctionCount": 52
        }
    }
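If you save that output, a few lines of Python can summarize it. The sketch below parses a trimmed copy of the response shown above and derives how much concurrency is already reserved for individual functions. The variable names are illustrative, and it assumes the response keeps this shape.

```python
import json

# Trimmed copy of the get-account-settings response
response = json.loads("""
{
    "AccountLimit": {
        "ConcurrentExecutions": 1000,
        "UnreservedConcurrentExecutions": 900
    }
}
""")

limit = response["AccountLimit"]
total = limit["ConcurrentExecutions"]
unreserved = limit["UnreservedConcurrentExecutions"]

# Whatever is not unreserved has been set aside for specific functions.
already_reserved = total - unreserved
print(f"{already_reserved} of {total} reserved")  # 100 of 1000 reserved
```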

See Also