How to Set Up S3 Inventory

This article gives an overview of Amazon S3 Inventory, which reports on S3 objects and their metadata, and highlights a few common use cases.

What is S3 Inventory

- It’s an Amazon S3 feature that helps you manage and maintain your blob (object) storage by producing scheduled lists of the objects in a bucket and their metadata.

- S3 Inventory is very helpful for compliance and regulatory needs, but it does not provide information about spending or estimate what your costs would be if a bucket lifecycle policy were applied.

- You can use CloudySave S3 Cost Calculator to evaluate your S3 costs.

- You can use S3 Inventory to audit and report on the replication and encryption status of your objects for business, compliance, and regulatory needs.

- It simplifies and speeds up business workflows and big data jobs by providing a scheduled alternative to the synchronous Amazon S3 List API operation.
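The auditing use case above can be sketched in a few lines of Python. This is a hypothetical example, assuming the inventory was configured to include the `EncryptionStatus` and `ReplicationStatus` fields: CSV inventory files have no header row, so the column names are passed in explicitly, in the order given by the report's manifest. The function name `audit_rows` and the sample data are illustrative only.

```python
import csv
import io
from typing import Dict, List


def audit_rows(csv_text: str, schema: List[str]) -> List[Dict[str, str]]:
    """Return inventory rows that are unencrypted or whose replication failed.

    csv_text: contents of one decompressed CSV inventory file (no header row).
    schema:   column names in file order, e.g. taken from the manifest's fileSchema.
    """
    findings = []
    for values in csv.reader(io.StringIO(csv_text)):
        row = dict(zip(schema, values))
        # NOT-SSE marks objects stored without server-side encryption.
        unencrypted = row.get("EncryptionStatus") == "NOT-SSE"
        # FAILED marks objects whose replication did not succeed.
        replication_failed = row.get("ReplicationStatus") == "FAILED"
        if unencrypted or replication_failed:
            findings.append(row)
    return findings


if __name__ == "__main__":
    # Hypothetical sample rows; bucket and key names are placeholders.
    schema = ["Bucket", "Key", "ReplicationStatus", "EncryptionStatus"]
    sample = (
        '"example-source-bucket","logs/a.txt","COMPLETED","SSE-S3"\n'
        '"example-source-bucket","logs/b.txt","FAILED","SSE-KMS"\n'
        '"example-source-bucket","tmp/c.txt","","NOT-SSE"\n'
    )
    for row in audit_rows(sample, schema):
        print(row["Key"], row["ReplicationStatus"] or "-", row["EncryptionStatus"])
```

Run on the sample, this prints `logs/b.txt FAILED SSE-KMS` and `tmp/c.txt - NOT-SSE`; the compliant object is skipped.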

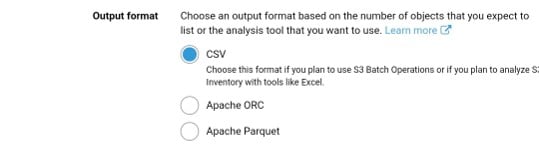

S3 Inventory can write its output in the following formats:

- Comma-separated values (CSV)

- Apache Optimized Row Columnar (ORC)

- Apache Parquet

Output files listing objects and their metadata can be generated on a daily or weekly basis, either for an entire bucket or for objects that share a prefix within the bucket.

- A weekly report is generated every seven days after the first report is delivered.

- Multiple inventory lists can be generated for a single bucket.

Configuration Options:

- Which object metadata to include.

- Whether to list all object versions or only current versions.

- Which destination to store the inventory list files in.

- Whether to generate the inventory daily or weekly.

- Whether to encrypt the inventory list files (optional).

You can query S3 Inventory reports with standard SQL using:

- Amazon Athena, in all Regions where Athena is available

- Amazon Redshift Spectrum

- Presto

- Apache Hive

- Apache Spark
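The engines above query the report files in place with SQL. For a small report, the same kind of aggregation can be sketched locally in Python. The example below is a hypothetical sketch that assumes the report includes the `Size` and `StorageClass` fields, that the CSV manifest stores its column order as a comma-separated `fileSchema` string, and that data files are gzip-compressed CSV; it totals object sizes per storage class and is not a substitute for Athena on large inventories.

```python
import csv
import gzip
import io
import json
from collections import defaultdict
from typing import Dict, List


def schema_from_manifest(manifest_json: str) -> List[str]:
    """Read the column order from a CSV inventory manifest's fileSchema field."""
    manifest = json.loads(manifest_json)
    return [name.strip() for name in manifest["fileSchema"].split(",")]


def bytes_per_storage_class(gz_bytes: bytes, schema: List[str]) -> Dict[str, int]:
    """Sum the Size column per StorageClass for one gzip-compressed CSV file."""
    size_idx = schema.index("Size")
    class_idx = schema.index("StorageClass")
    totals: Dict[str, int] = defaultdict(int)
    text = gzip.decompress(gz_bytes).decode("utf-8")
    for values in csv.reader(io.StringIO(text)):
        # Rows without a size (for example delete markers) are skipped.
        if values[size_idx]:
            totals[values[class_idx]] += int(values[size_idx])
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical manifest and data; real files come from the destination bucket.
    manifest = json.dumps({"fileFormat": "CSV", "fileSchema": "Bucket, Key, Size, StorageClass"})
    schema = schema_from_manifest(manifest)
    rows = '"b","a.txt","100","STANDARD"\n"b","b.txt","50","GLACIER"\n"b","c.txt","25","STANDARD"\n'
    print(bytes_per_storage_class(gzip.compress(rows.encode("utf-8")), schema))
    # {'STANDARD': 125, 'GLACIER': 50}
```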

Setting Up S3 Inventory

An S3 Inventory setup involves two buckets:

- Source bucket: the bucket whose objects the inventory lists.

- Destination bucket: the bucket where the inventory list files are stored.

Source Bucket Characteristics

This bucket stores the objects that the inventory lists. An inventory list can cover the whole bucket or be filtered by object key name prefix.

The source bucket contains:

- The objects listed in the inventory

- The inventory configuration

Destination Bucket Characteristics

This is where Amazon S3 writes the inventory list files. To group all inventory list files in one location in the destination bucket, you can specify an object key name prefix in the configuration.

The destination bucket:

- Stores the inventory list files.

- Stores manifest files that list every inventory list file stored in the destination bucket.

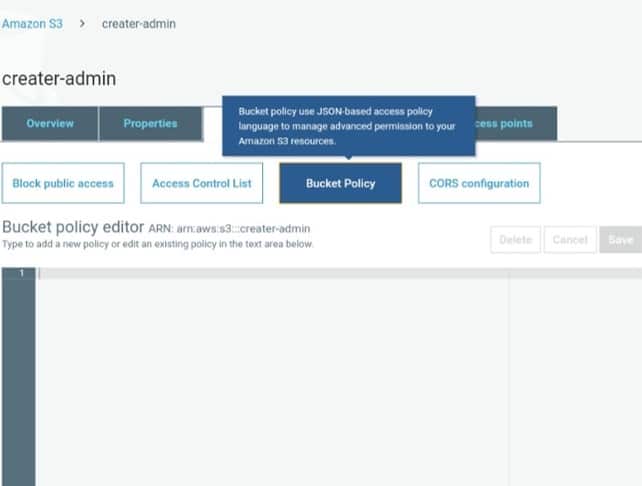

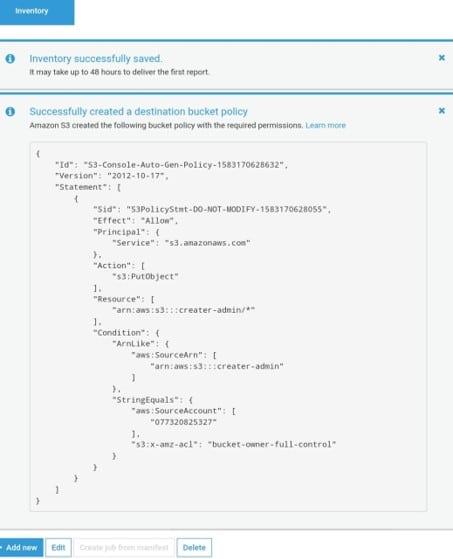

- Must have a bucket policy that grants Amazon S3 permission to verify bucket ownership and to write files to the bucket.

- Must be in the same Region as the source bucket.

- Can be the same bucket as the source bucket.

- Can be owned by a different AWS account than the source bucket.
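A manifest can be read to find the data files it points to. The sketch below assumes a `manifest.json` layout with a `files` array whose entries carry `key`, `size` and `MD5checksum`, and checks a downloaded data file against its listed checksum. The helper names are hypothetical, and downloading the objects from the destination bucket is left out.

```python
import hashlib
import json
from typing import List, Tuple


def data_files(manifest_json: str) -> List[Tuple[str, int, str]]:
    """Return (key, size, md5) for each inventory data file listed in a manifest."""
    manifest = json.loads(manifest_json)
    return [(f["key"], f["size"], f["MD5checksum"]) for f in manifest["files"]]


def matches_checksum(content: bytes, expected_md5: str) -> bool:
    """Compare a downloaded file's MD5 hex digest with the manifest entry."""
    return hashlib.md5(content).hexdigest() == expected_md5.lower()


if __name__ == "__main__":
    # Hypothetical manifest content; the key is a placeholder path.
    content = b"example inventory bytes"
    manifest = json.dumps(
        {"files": [{"key": "inventory/data/example.csv.gz",
                    "size": len(content),
                    "MD5checksum": hashlib.md5(content).hexdigest()}]}
    )
    for key, size, md5 in data_files(manifest):
        print(key, size, matches_checksum(content, md5))
```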

S3 Inventory Perks:

- Easier management of blob storage.

- Lists of the objects in a bucket, generated on a defined schedule.

- Multiple inventory lists configured for one bucket.

- Inventory lists published to a destination bucket as CSV, ORC, or Parquet files.

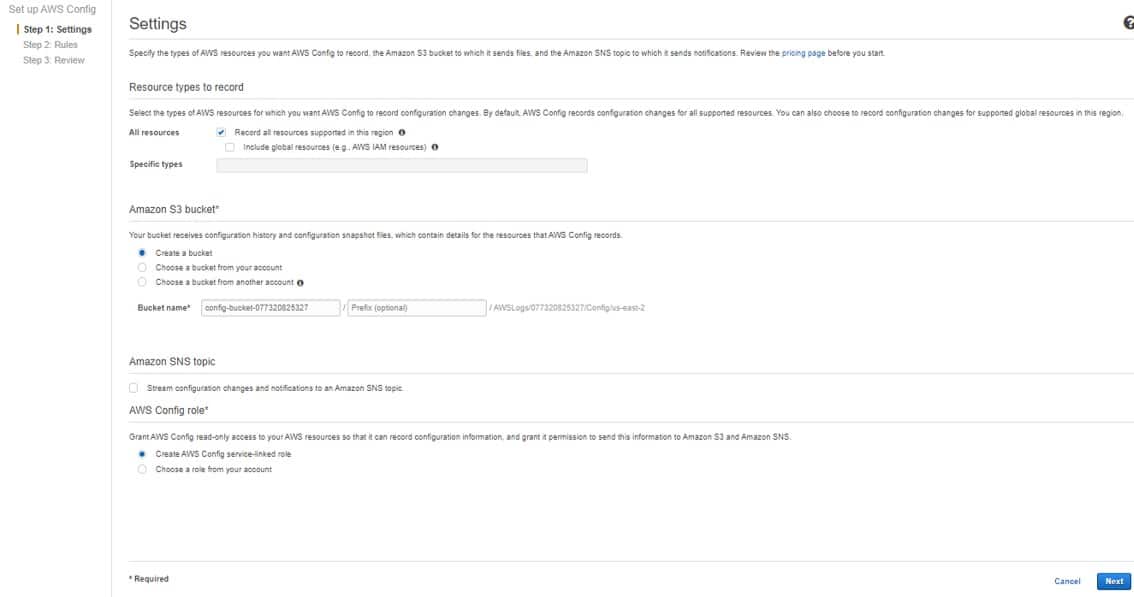

You can set up an inventory using:

- The AWS Management Console (the simplest way)

- The REST API

- The AWS CLI

- The AWS SDKs

Adding a bucket policy to the destination bucket (via the console)

Create a bucket policy on the destination bucket that grants Amazon S3 permission to write objects to the bucket at the specified location.
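The policy for this step can be sketched as a Python function that builds the JSON document. It follows the general shape of the destination-bucket policy example in the Amazon S3 documentation as best understood here; the statement ID, the function name and the conditions that restrict writes to one source bucket and account are assumptions to review against the current AWS documentation before use.

```python
import json
from typing import Any, Dict


def inventory_destination_policy(
    destination_bucket: str, source_bucket: str, source_account_id: str
) -> Dict[str, Any]:
    """Build a bucket policy letting Amazon S3 write inventory files to destination_bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InventoryDestinationPolicy",  # hypothetical statement ID
                "Effect": "Allow",
                # The S3 service principal is what writes the inventory files.
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{destination_bucket}/*",
                "Condition": {
                    # Accept writes only on behalf of this source bucket...
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{source_bucket}"},
                    "StringEquals": {
                        # ...owned by this account, with the bucket owner getting full control.
                        "aws:SourceAccount": source_account_id,
                        "s3:x-amz-acl": "bucket-owner-full-control",
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bucket names and account ID.
    policy = inventory_destination_policy("example-destination", "example-source", "111122223333")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the bucket policy editor in the console; with boto3, the same string could be passed as `Policy` to the S3 client's `put_bucket_policy`.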

Start by configuring an inventory that lists the objects in one source bucket and publishes the list to a different destination bucket.

When configuring an inventory list for a source bucket, you:

- Choose a destination bucket for storing the list.

- Choose whether to generate the list daily or weekly.

- Choose which object metadata to include.

- Choose whether to list every object version or only the current ones.
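The choices in the list above map onto fields of an inventory configuration. The sketch below builds that configuration as a Python dict, assuming the `InventoryConfiguration` structure used by the PUT Bucket inventory configuration operation as exposed in boto3 (`Destination.S3BucketDestination`, `Schedule.Frequency`, `IncludedObjectVersions`, `OptionalFields`, `Filter`). The function name, its defaults and the validation sets are hypothetical.

```python
from typing import Any, Dict, List, Optional

FREQUENCIES = {"Daily", "Weekly"}
VERSION_CHOICES = {"All", "Current"}
FORMATS = {"CSV", "ORC", "Parquet"}


def inventory_configuration(
    config_id: str,
    destination_bucket: str,
    frequency: str = "Weekly",
    included_versions: str = "Current",
    optional_fields: Optional[List[str]] = None,
    output_format: str = "CSV",
    source_prefix: Optional[str] = None,
) -> Dict[str, Any]:
    """Build an InventoryConfiguration dict from the choices listed above."""
    if frequency not in FREQUENCIES:
        raise ValueError(f"frequency must be one of {sorted(FREQUENCIES)}")
    if included_versions not in VERSION_CHOICES:
        raise ValueError(f"included_versions must be one of {sorted(VERSION_CHOICES)}")
    if output_format not in FORMATS:
        raise ValueError(f"output_format must be one of {sorted(FORMATS)}")

    config: Dict[str, Any] = {
        "Id": config_id,
        "IsEnabled": True,
        "Destination": {
            "S3BucketDestination": {
                # The destination is given as a bucket ARN, not a bare name.
                "Bucket": f"arn:aws:s3:::{destination_bucket}",
                "Format": output_format,
            }
        },
        "Schedule": {"Frequency": frequency},
        # "All" lists every object version; "Current" lists only current ones.
        "IncludedObjectVersions": included_versions,
        # Extra metadata columns, e.g. "Size", "StorageClass", "EncryptionStatus".
        "OptionalFields": list(optional_fields or []),
    }
    if source_prefix:
        # Limit the inventory to objects whose keys start with this prefix.
        config["Filter"] = {"Prefix": source_prefix}
    return config
```

For example, `inventory_configuration("weekly-report", "example-destination", optional_fields=["Size", "StorageClass"])` describes a weekly CSV report of current versions for the whole bucket.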

You can optionally encrypt the inventory list file using:

- Amazon S3 managed keys (SSE-S3)

- AWS Key Management Service (AWS KMS) keys (SSE-KMS), with a customer managed customer master key (CMK); the key policy steps later in this article give more details
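The two encryption options correspond to an `Encryption` element inside the destination settings of the inventory configuration. The sketch below assumes the boto3 shape of that element (`SSES3` as an empty object, or `SSEKMS` with a `KeyId` holding the KMS key ARN); the helper name and the simple ARN check are hypothetical.

```python
from typing import Any, Dict, Optional


def inventory_encryption(kms_key_arn: Optional[str] = None) -> Dict[str, Any]:
    """Return the Encryption element for an S3BucketDestination.

    Without a key ARN, use SSE-S3 (Amazon S3 managed keys); otherwise use
    SSE-KMS with the given customer managed key.
    """
    if kms_key_arn is None:
        return {"SSES3": {}}
    # Rough sanity check only: a KMS key ARN looks like arn:<partition>:kms:...
    if not (kms_key_arn.startswith("arn:") and ":kms:" in kms_key_arn):
        raise ValueError("expected a KMS key ARN")
    return {"SSEKMS": {"KeyId": kms_key_arn}}
```

The returned dict would go under `Destination` > `S3BucketDestination` > `Encryption`. Choosing SSE-KMS also requires the key policy change described later in this article.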

To configure an inventory list programmatically, use the PUT Bucket inventory configuration operation through one of the following:

- The REST API

- The AWS CLI

- The AWS SDKs
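With an SDK, the operation is a single call. The sketch below assumes a boto3 S3 client (for example `boto3.client("s3")`), whose `put_bucket_inventory_configuration` takes `Bucket`, `Id` and `InventoryConfiguration`, and whose `list_bucket_inventory_configurations` returns pages with `InventoryConfigurationList`, `IsTruncated` and `NextContinuationToken`. The client is passed in so the helpers can be exercised with a stand-in object; the helper names are hypothetical.

```python
from typing import Any, Dict, List


def apply_inventory_configuration(s3_client: Any, source_bucket: str, config: Dict[str, Any]) -> None:
    """Attach an inventory configuration to the source bucket (PUT Bucket inventory configuration)."""
    s3_client.put_bucket_inventory_configuration(
        Bucket=source_bucket,
        Id=config["Id"],
        InventoryConfiguration=config,
    )


def inventory_ids(s3_client: Any, source_bucket: str) -> List[str]:
    """List the IDs of all inventory configurations on a bucket, following pagination."""
    ids: List[str] = []
    kwargs: Dict[str, Any] = {"Bucket": source_bucket}
    while True:
        response = s3_client.list_bucket_inventory_configurations(**kwargs)
        ids.extend(c["Id"] for c in response.get("InventoryConfigurationList", []))
        if not response.get("IsTruncated"):
            return ids
        # Request the next page using the token returned by the previous call.
        kwargs["ContinuationToken"] = response["NextContinuationToken"]
```

Because a bucket can carry several inventory configurations, each one is stored under its own `Id`; calling `apply_inventory_configuration` again with an existing `Id` is expected to replace that configuration.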

To encrypt the inventory list file with SSE-KMS, you must give Amazon S3 permission to use the CMK stored in AWS KMS.

You can configure encryption for the inventory list file through:

- The AWS Management Console

- The REST API

- The AWS CLI

- The AWS SDKs

Amazon S3 must be granted permission to use the AWS KMS customer managed CMK to encrypt inventory files. You do this by modifying the key policy of the customer managed CMK used to encrypt the inventory files.

Granting Amazon S3 Permission to Use Your AWS KMS CMK for Encryption

Use a key policy to grant Amazon S3 permission to encrypt with your customer managed AWS KMS customer master key (AWS KMS CMK).

To update the key policy so that the customer managed CMK can be used to encrypt the inventory file, follow these steps:

- Sign in to the AWS Management Console using the AWS account that owns the customer managed CMK.

- Open the AWS KMS console.

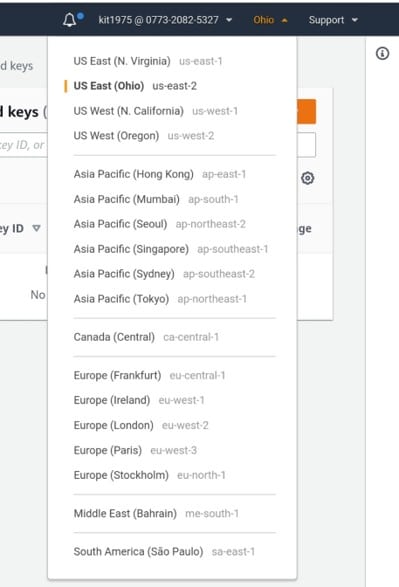

- To change the AWS Region, use the Region selector in the upper-right corner of the page.

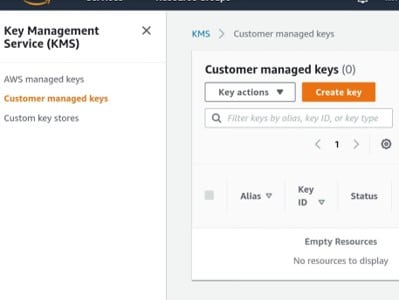

- In the left navigation pane, choose Customer managed keys.

- Under Customer managed keys, choose the CMK you want to use to encrypt the inventory file.

- Under Key policy, choose Switch to policy view.

- To update the key policy, choose Edit.

- In the Edit key policy field, add the following statement to your existing key policy:

```json
{
  "Sid": "Allow Amazon S3 use of the CMK",
  "Effect": "Allow",
  "Principal": {
    "Service": "s3.amazonaws.com"
  },
  "Action": [
    "kms:GenerateDataKey"
  ],
  "Resource": "*"
}
```

- Click on “Save changes”.

You can also use the AWS KMS PUT key policy API (PutKeyPolicy) to copy the key policy to the customer managed CMK that is used to encrypt the inventory file.
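The API route can be sketched as "fetch, merge, write back". The example below assumes a boto3 KMS client, whose `get_key_policy(KeyId=..., PolicyName="default")` returns the policy as a JSON string under `Policy` and whose `put_key_policy(KeyId=..., PolicyName="default", Policy=...)` replaces it. It appends the statement from the console steps only if a statement with the same `Sid` is not already present; the helper names are hypothetical, and the merged policy should be reviewed before it is written.

```python
import json
from typing import Any

# The statement from the console steps above, as a Python dict.
S3_INVENTORY_STATEMENT = {
    "Sid": "Allow Amazon S3 use of the CMK",
    "Effect": "Allow",
    "Principal": {"Service": "s3.amazonaws.com"},
    "Action": ["kms:GenerateDataKey"],
    "Resource": "*",
}


def add_s3_statement(policy_json: str) -> str:
    """Return the key policy with the S3 statement appended once (idempotent)."""
    policy = json.loads(policy_json)
    statements = policy.setdefault("Statement", [])
    if isinstance(statements, dict):
        # A policy may hold a single statement object instead of a list.
        statements = [statements]
        policy["Statement"] = statements
    if not any(s.get("Sid") == S3_INVENTORY_STATEMENT["Sid"] for s in statements):
        statements.append(dict(S3_INVENTORY_STATEMENT))
    return json.dumps(policy)


def update_key_policy(kms_client: Any, key_id: str) -> None:
    """Fetch the default key policy, add the S3 statement, and write it back."""
    current = kms_client.get_key_policy(KeyId=key_id, PolicyName="default")["Policy"]
    kms_client.put_key_policy(KeyId=key_id, PolicyName="default", Policy=add_s3_statement(current))
```

Keeping the merge idempotent means the update can be rerun safely without duplicating the statement.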


CloudySave helps to improve your AWS Usage & management by providing full visibility to your DevOps & Engineers into their Cloud Usage.