AWS Load Balancer Pricing

AWS Load Balancer Pricing – How Load Balancing Works

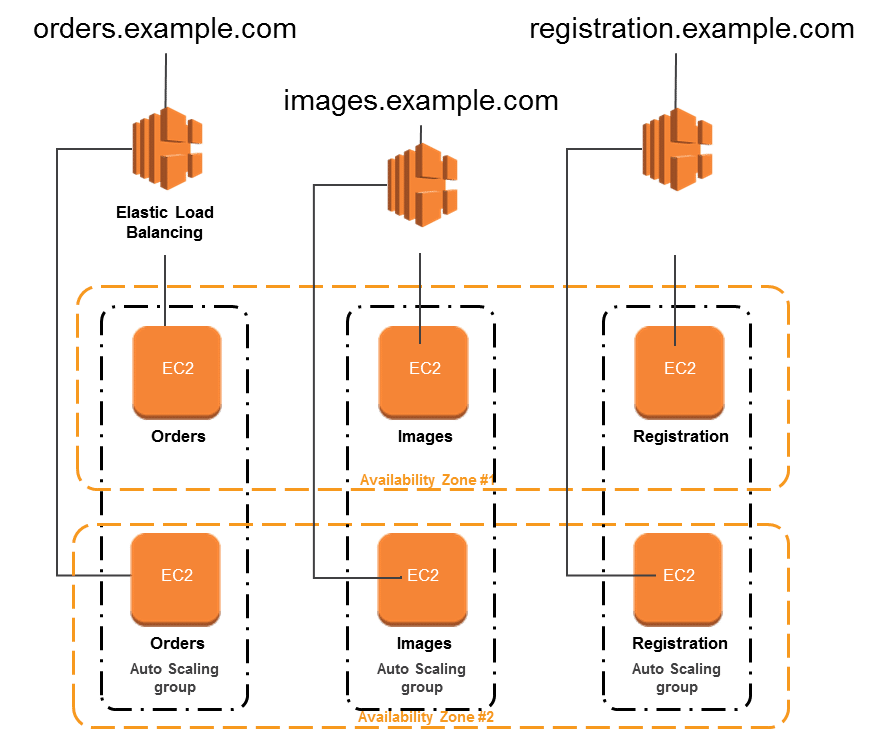

The advantages of using a load balancer generally outweigh whatever you are charged under AWS Load Balancer pricing. Elastic Load Balancing distributes your incoming application traffic across multiple EC2 instances. The benefits are as follows:

– It spreads incoming traffic so that no single instance is overloaded.

– It provides the load balancing capacity needed to distribute that traffic.

– It identifies unhealthy instances and immediately reroutes traffic to healthy ones until the unhealthy instances are restored.

– It helps you reach a higher level of fault tolerance.
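The rerouting behaviour described in the list above can be pictured with a small sketch. This is only an illustration and not how Elastic Load Balancing is implemented: the function name `route_requests`, the instance names, and the simple round-robin policy are all assumptions made for the example.

```python
from itertools import cycle


def route_requests(instances, healthy, request_count):
    """Assign requests round-robin, skipping instances not marked healthy.

    `instances` is a list of hypothetical instance names and `healthy`
    maps each name to the result of its latest health check.
    """
    targets = [name for name in instances if healthy.get(name, False)]
    if not targets:
        raise RuntimeError("no healthy instances available")
    rotation = cycle(targets)
    return [next(rotation) for _ in range(request_count)]


# Hypothetical fleet: "i-b" has failed its health check.
instances = ["i-a", "i-b", "i-c"]
health = {"i-a": True, "i-b": False, "i-c": True}
print(route_requests(instances, health, 4))
```

Once `i-b` passes its health check again, setting its entry back to `True` puts it back in the rotation.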

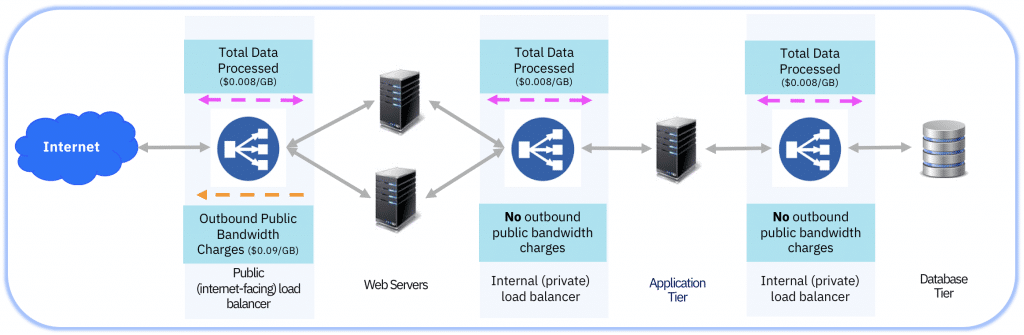

Elastic Load Balancing can be used within a single Availability Zone (AZ) or across multiple AZs to improve application performance. It can also be used in a VPC to distribute traffic between application tiers in a virtual network that you define.


AWS Load Balancer Pricing: Classic Load Balancer

AWS Load Balancer Pricing – Elastic Load Balancer

Elastic Load Balancing charges you according to your usage. You are billed for every hour or partial hour that an Elastic Load Balancer runs, and for every GB of data transferred through it. At the end of each month, you are billed for your actual usage of Elastic Load Balancing resources.

Partial hours are billed as full hours. Regular EC2 service fees are billed separately.

| Region | Price per Elastic Load Balancer-hour (or partial hour) | Price per GB of data processed |
| --- | --- | --- |
| China (Ningxia) Region | ¥ 0.156 | ¥ 0.072 |
| China (Beijing) Region | ¥ 0.156 | ¥ 0.072 |
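As a rough illustration of how the two charges combine, the sketch below rounds running time up to whole hours and adds the data charge. The function name `classic_lb_charge` and the sample usage figures are assumptions made for the example; the default rates are the ones listed in the table above.

```python
import math


def classic_lb_charge(running_hours, gb_processed,
                      hourly_rate=0.156, per_gb_rate=0.072):
    """Estimate a Classic Load Balancer charge in yuan.

    Partial hours are rounded up to full hours, as described in the text.
    EC2 fees are billed separately and are not included here.
    """
    billed_hours = math.ceil(running_hours)
    return billed_hours * hourly_rate + gb_processed * per_gb_rate


# Hypothetical month: 719.5 running hours and 100 GB processed.
charge = classic_lb_charge(running_hours=719.5, gb_processed=100)
print(f"¥{charge:.2f}")
```

In this sketch the 719.5 running hours are billed as 720 hours.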


AWS Load Balancer Pricing: Application Load Balancer


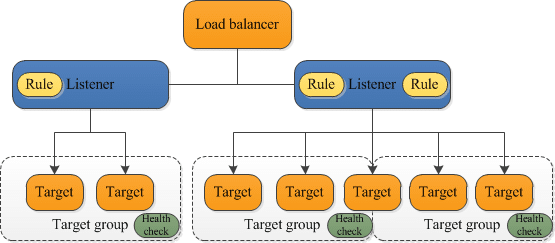

Application Load Balancers are billed according to your usage. You are charged for every hour or partial hour that an Application Load Balancer runs, and for the number of Load Balancer Capacity Units (LCUs) it uses.

| Region | Price per Application Load Balancer-hour (or partial hour) | Price per LCU-hour (or partial hour) |
| --- | --- | --- |
| China (Ningxia) Region | ¥ 0.156 | ¥ 0.072 |
| China (Beijing) Region | ¥ 0.156 | ¥ 0.072 |

Partial hours are billed as full hours. Regular EC2 service fees are billed separately.


AWS Load Balancer Pricing: Network Load Balancer

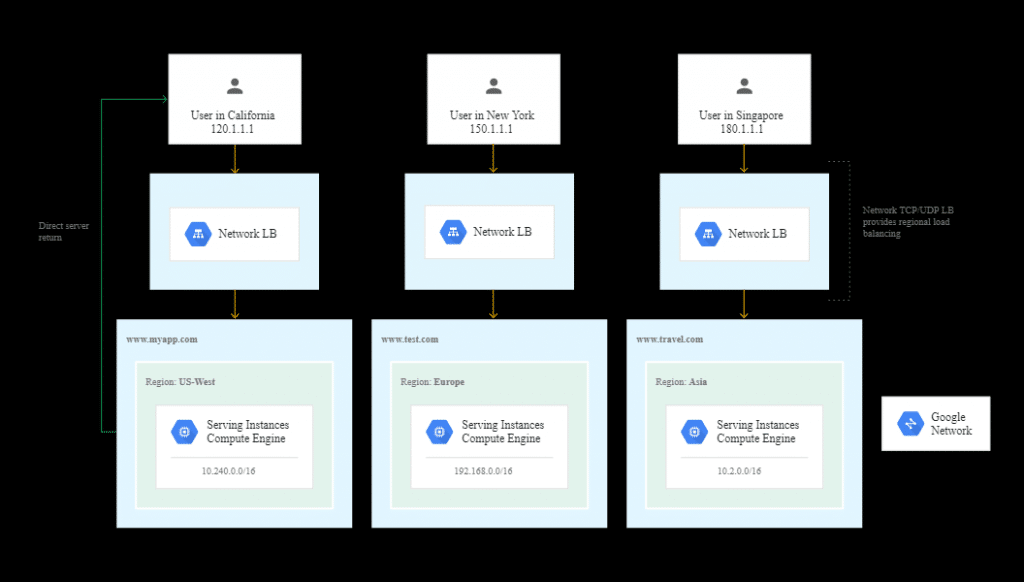


Network Load Balancers are billed according to your usage. You are charged for every hour or partial hour that a Network Load Balancer runs, and for the number of Load Balancer Capacity Units (LCUs) it uses.

Partial hours are billed as full hours. Regular EC2 service fees are billed separately.

| Region | Price per Network Load Balancer-hour (or partial hour) | Price per LCU-hour (or partial hour) |
| --- | --- | --- |
| China (Ningxia) Region | ¥ 0.156 | ¥ 0.072 |
| China (Beijing) Region | ¥ 0.156 | ¥ 0.072 |

Which type of Load Balancer offers the least expensive AWS Load Balancer Pricing?

AWS Load Balancer Pricing – Classic Vs Application Load Balancer

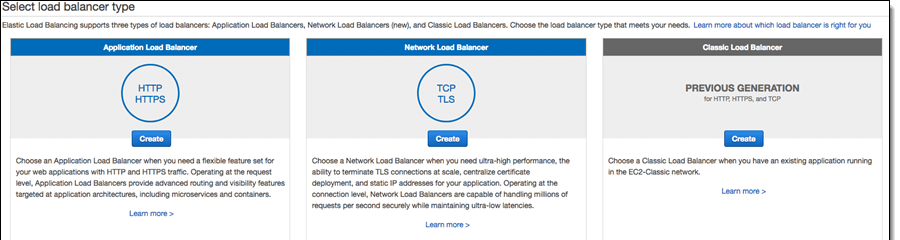

The Application Load Balancer is similar to the Classic Elastic Load Balancer that most users already know. However, it adds a set of microservice-friendly features, such as the following:

– Request Tracing

– Containerized App Support

– Content-Based Routing

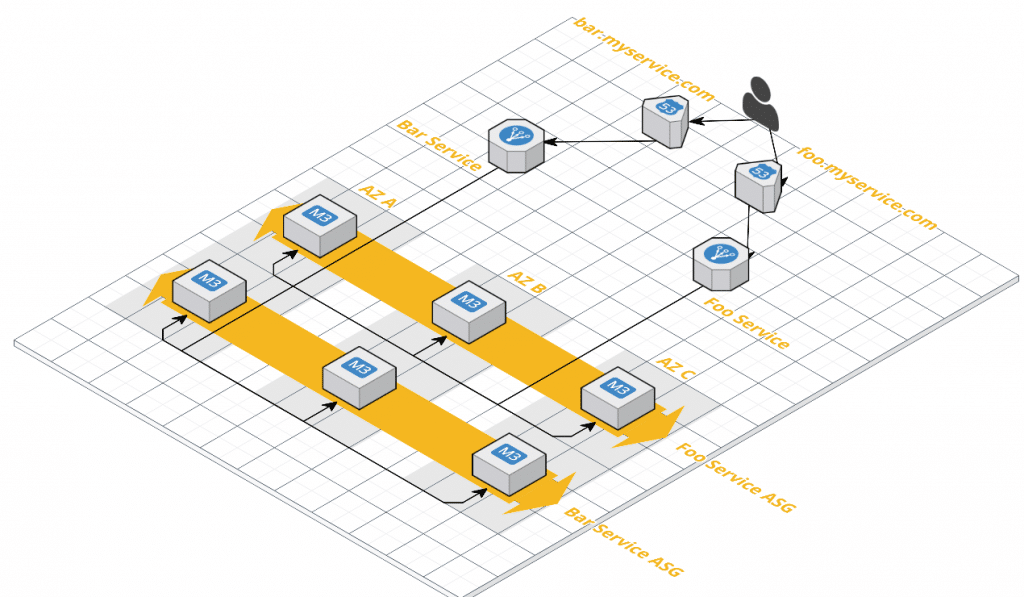

The most striking feature is content-based routing. With Application Load Balancers, you keep the simplicity and convenience of Elastic Load Balancing while also gaining path-based routing, where a request is forwarded to a different group of targets depending on its URL path.
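A toy model can make path-based routing more concrete. The sketch below is not the Application Load Balancer rule engine: the function `pick_target_group`, the prefix-matching logic, and the group names are assumptions chosen for illustration only.

```python
def pick_target_group(path, rules, default_group):
    """Return the group of the first rule whose prefix matches the path.

    `rules` is an ordered list of (path_prefix, group_name) pairs; both
    the rule format and the group names are hypothetical.
    """
    for prefix, group in rules:
        if path.startswith(prefix):
            return group
    return default_group


rules = [
    ("/api/", "api-servers"),
    ("/images/", "image-servers"),
]

print(pick_target_group("/api/orders", rules, "web-servers"))
print(pick_target_group("/index.html", rules, "web-servers"))
```

The point of the sketch is that one load balancer can front several groups of servers, which is what allows a single Application Load Balancer to stand in for several Classic Load Balancers.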

Application Load Balancers are the best choice here. You do not need to manage extra routing servers or worry about their recovery or high availability (HA), because AWS provides content-based routing for you.

Classic Load Balancer = $0.028 per hour.

Application Load Balancer = $0.0252 per hour.

AWS Load Balancer Pricing – How Application Load Balancer Saves Money

This may not seem hard to work out. However, AWS bills Application Load Balancers differently, using a new unit called the Load Balancer Capacity Unit (LCU). For each hour, you are charged only for the metric with the highest usage among the following:

– Bandwidth in Mbps

– Active connections per minute

– New connections per second
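The "highest metric wins" rule can be sketched as follows. The per-LCU capacities in `LCU_CAPACITY` (25 new connections per second, 3,000 active connections per minute, 2.22 Mbps) are assumptions based on figures AWS has published for Application Load Balancers; check them against the current pricing page before relying on them. The function name and the sample usage are hypothetical.

```python
# Assumed capacity of one LCU per dimension; verify against AWS pricing.
LCU_CAPACITY = {
    "new_connections_per_second": 25,
    "active_connections_per_minute": 3000,
    "bandwidth_mbps": 2.22,
}


def lcus_consumed(usage):
    """Return the LCUs billed for an hour: the largest per-dimension ratio."""
    return max(usage[name] / LCU_CAPACITY[name] for name in LCU_CAPACITY)


# Hypothetical average usage over one hour.
usage = {
    "new_connections_per_second": 50,
    "active_connections_per_minute": 3000,
    "bandwidth_mbps": 1.11,
}

lcus = lcus_consumed(usage)
print(lcus)
```

Here new connections dominate (50 / 25 = 2 LCUs), so the other two dimensions do not add to the bill for that hour. Multiplying the result by the price per LCU-hour gives the LCU part of the charge.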

As a result, the calculation becomes somewhat complex once you work with load balancing. Consider the following example:

Application Load Balancer = $0.0252 per hour × 24 hours × 5 ALBs = $3.02 per day

Classic Load Balancer = $0.028 per hour × 24 hours × 50 ELBs = $33.60 per day

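This calculation counts hourly charges only, and it assumes that content-based routing lets 5 Application Load Balancers do the work of 50 Classic Load Balancers. Under that assumption, Application Load Balancers are clearly cheaper and more convenient to work with.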
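The arithmetic in this comparison can be reproduced with a few lines of Python. The helper name `daily_hourly_charge` is made up for the example; the rates and load balancer counts are the ones quoted above, and LCU and data charges are left out, as they are in the comparison itself.

```python
def daily_hourly_charge(rate_per_hour, balancer_count, hours=24):
    """Daily cost in dollars from hourly charges only (no LCU or data fees)."""
    return rate_per_hour * hours * balancer_count


alb_per_day = daily_hourly_charge(0.0252, 5)   # 5 Application Load Balancers
clb_per_day = daily_hourly_charge(0.028, 50)   # 50 Classic Load Balancers

print(f"ALB: ${alb_per_day:.2f} per day")
print(f"Classic: ${clb_per_day:.2f} per day")
```

Changing the two counts shows how strongly the result depends on how many Classic Load Balancers one Application Load Balancer can replace.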

To start working with load balancers, you can go to the AWS Lightsail console and create an AWS Lightsail Load Balancer.