AWS RDS Instance Pricing

Previous Generation DB Instance Details and RDS Instance Pricing

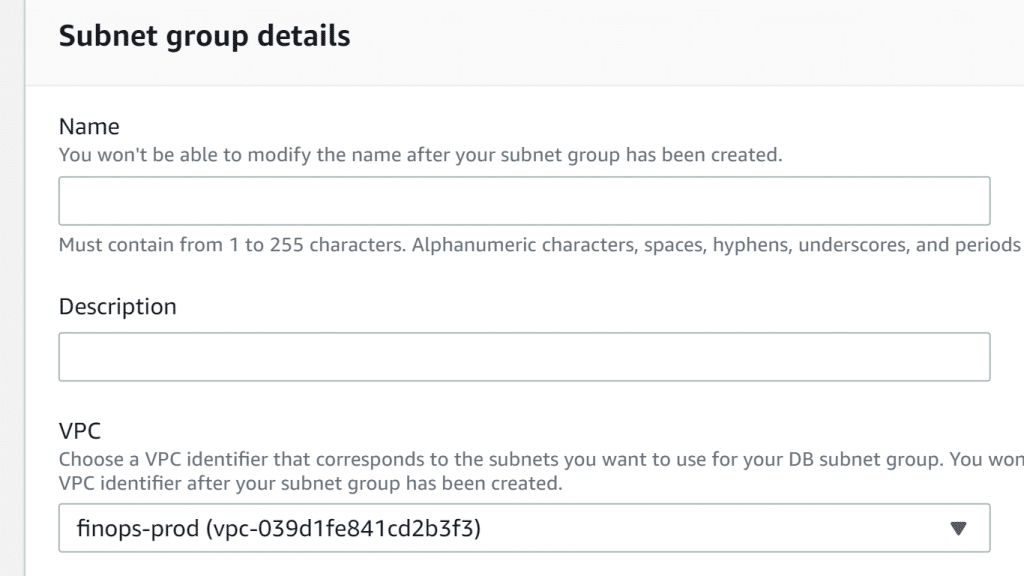

Previous generation RDS DB instances give you access to database engines such as MySQL, PostgreSQL, and Oracle with no long-term commitment. You can explore these services without worrying about purchasing, managing, or maintaining your own hardware. This lowers your costs and frees you from the strenuous job of administering relational databases, so you can give your applications and your customers the attention they need. To learn more about the types of RDS instances, check out the AWS RDS Instance Types guidelines.

| Type | vCPU | Memory (GiB) | PIOPS-Optimized | Network Performance |
|---|---|---|---|---|
| Standard | | | | |
| db.m1.small | 1 | 1.7 | No | Low |
| db.m1.medium | 1 | 3.75 | No | Average |
| db.m1.large | 2 | 7.5 | Yes | Average |
| db.m1.xlarge | 4 | 15 | Yes | High |
| db.m3.medium | 1 | 3.75 | No | Average |
| db.m3.large | 2 | 7.5 | No | Average |
| db.m3.xlarge | 4 | 15 | Yes | High |
| db.m3.2xlarge | 8 | 30 | Yes | High |
| Memory Optimized | | | | |
| db.m2.xlarge | 2 | 17.1 | No | Average |
| db.m2.2xlarge | 4 | 34.2 | Yes | Average |
| db.m2.4xlarge | 8 | 68.4 | Yes | High |
| db.r3.large | 2 | 15.25 | No | Average |
| db.r3.xlarge | 4 | 30.5 | 500 | Average |
| db.r3.2xlarge | 8 | 61 | 1,000 | High |
| db.r3.4xlarge | 16 | 122 | 2,000 | High |
| db.r3.8xlarge | 32 | 244 | No | 10 Gbps |

On-Demand DB Instance Pricing

- MySQL

Single-AZ Deployment

The following prices apply to a DB instance deployed in a single Availability Zone.

Region: US East (Ohio)

| Standard Instance | Hourly Fee |
|---|---|
| db.t2.micro | $0.017 |
| db.t2.small | $0.034 |
| db.t2.medium | $0.068 |
| db.t2.large | $0.136 |
| db.t2.xlarge | $0.272 |
| db.t2.2xlarge | $0.544 |
| db.m4.large | $0.175 |
| db.m4.xlarge | $0.35 |
| db.m4.2xlarge | $0.70 |
| db.m4.4xlarge | $1.401 |
| db.m4.10xlarge | $3.502 |
| db.m4.16xlarge | $5.60 |

| Memory Optimized Instance | Hourly Fee |
|---|---|
| db.r4.large | $0.24 |
| db.r4.xlarge | $0.48 |
| db.r4.2xlarge | $0.96 |
| db.r4.4xlarge | $1.92 |
| db.r4.8xlarge | $3.84 |
| db.r4.16xlarge | $7.68 |
| db.r3.large | $0.24 |
| db.r3.xlarge | $0.475 |
| db.r3.2xlarge | $0.945 |
| db.r3.4xlarge | $1.89 |
| db.r3.8xlarge | $3.78 |

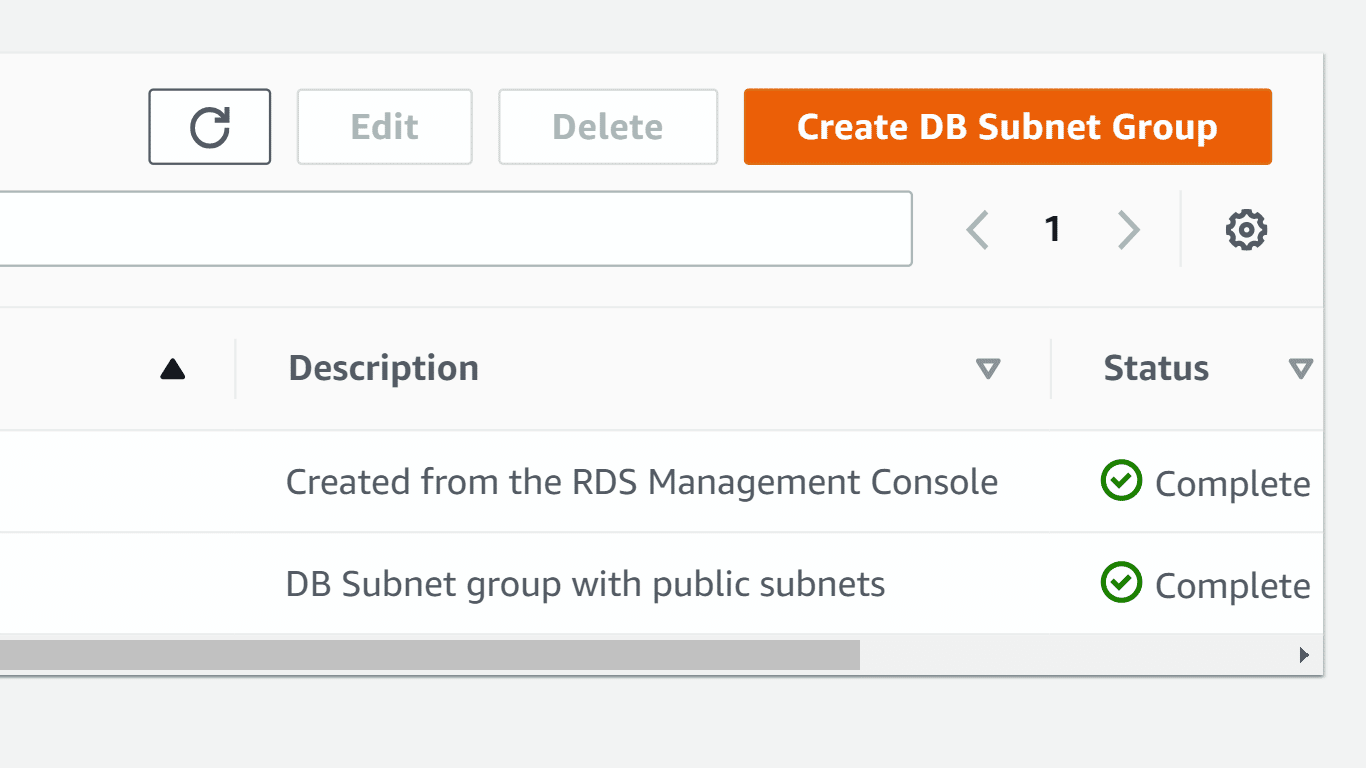

Multi-AZ Deployment

A Multi-AZ deployment gives your DB instance enhanced data availability and durability.

RDS provisions and maintains a standby in a different Availability Zone, so it can fail over automatically whenever a planned or unplanned outage occurs.

Region: US East (Ohio)

| Standard Instance | Hourly Fee |
|---|---|
| db.t2.micro | $0.034 |
| db.t2.small | $0.068 |
| db.t2.medium | $0.136 |
| db.t2.large | $0.27 |
| db.t2.xlarge | $0.544 |
| db.t2.2xlarge | $1.088 |
| db.m4.large | $0.35 |
| db.m4.xlarge | $0.70 |
| db.m4.2xlarge | $1.40 |
| db.m4.4xlarge | $2.802 |
| db.m4.10xlarge | $7.004 |
| db.m4.16xlarge | $11.20 |

| Memory Optimized Instance | Hourly Fee |
|---|---|
| db.r4.large | $0.48 |
| db.r4.xlarge | $0.96 |
| db.r4.2xlarge | $1.92 |
| db.r4.4xlarge | $3.84 |
| db.r4.8xlarge | $7.68 |
| db.r4.16xlarge | $15.36 |
| db.r3.large | $0.48 |
| db.r3.xlarge | $0.95 |
| db.r3.2xlarge | $1.89 |
| db.r3.4xlarge | $3.78 |
| db.r3.8xlarge | $7.56 |

Both Single-AZ and Multi-AZ deployments are priced per DB instance-hour consumed. You are charged from the moment a DB instance begins launching until it is terminated. Each partial DB instance-hour consumed is billed as a full hour.
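As a rough illustration of the billing rule described above, the sketch below rounds a running time up to whole instance-hours and multiplies it by an hourly fee. The helper name `on_demand_cost` is hypothetical, and the two rates are the db.t2.micro fees from the US East (Ohio) tables in this article.

```python
import math

# Hourly fees copied from the db.t2.micro rows of the tables above (US East (Ohio)).
SINGLE_AZ_HOURLY = 0.017
MULTI_AZ_HOURLY = 0.034


def on_demand_cost(hours_running: float, hourly_fee: float) -> float:
    """Charge every started instance-hour as a full hour (hypothetical helper)."""
    billed_hours = math.ceil(hours_running)
    return billed_hours * hourly_fee


# An instance that ran for 10 hours and 15 minutes is billed for 11 hours.
single_az = on_demand_cost(10.25, SINGLE_AZ_HOURLY)
multi_az = on_demand_cost(10.25, MULTI_AZ_HOURLY)
print(f"Single-AZ: ${single_az:.3f}")
print(f"Multi-AZ:  ${multi_az:.3f}")
```

With these rates the same running time costs twice as much in a Multi-AZ deployment, which matches the ratio between the two db.t2.micro rows.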

Reserved DB Instance Pricing

Amazon RDS Reserved Instances give you the ability to reserve database capacity in a data center.

In return, you receive a significant discount on the hourly charge for the instances covered by the reservation.

There are three RI payment options:

- No Upfront
- Partial Upfront
- All Upfront

These options let you balance the amount you pay upfront against your effective hourly price while still getting a substantial discount over On-Demand prices. Reserved instances can be purchased from the RDS console.

Single-AZ Deployment

Region: US East (Ohio)

db.t2.micro

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $10.22 | $0.014 | 18% | $0.017 |
| Partial Upfront | $51.00 | $4.38 | $0.012 | 30% | $0.017 |
| All Upfront | $102.00 | $0.00 | $0.012 | 32% | $0.017 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $109.00 | $2.92 | $0.008 | 52% | $0.017 |
| All Upfront | $202.00 | $0.00 | $0.008 | 55% | $0.017 |
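The Effective Hourly and Savings columns can be approximated from the Upfront and Monthly columns. The sketch below assumes the total cost of the term is spread evenly over 8,760 hours per year; that formula is an assumption rather than something stated in the pricing tables, although it does reproduce the rounded db.t2.micro figures. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (assumption)


def effective_hourly(upfront: float, monthly: float, years: int) -> float:
    """Spread the total cost of the term evenly over every hour in it."""
    total_cost = upfront + monthly * 12 * years
    return total_cost / (HOURS_PER_YEAR * years)


def savings_percent(effective: float, on_demand: float) -> float:
    """Discount of the effective hourly price relative to the On-Demand fee."""
    return (1 - effective / on_demand) * 100


ON_DEMAND = 0.017  # db.t2.micro, Single-AZ, US East (Ohio)

# (term in years, payment option, upfront, monthly) from the db.t2.micro tables
options = [
    (1, "No Upfront", 0.00, 10.22),
    (1, "Partial Upfront", 51.00, 4.38),
    (1, "All Upfront", 102.00, 0.00),
    (3, "Partial Upfront", 109.00, 2.92),
    (3, "All Upfront", 202.00, 0.00),
]

for years, name, upfront, monthly in options:
    rate = effective_hourly(upfront, monthly, years)
    saved = savings_percent(rate, ON_DEMAND)
    print(f"{years}y {name:<16} ${rate:.3f}/h  {saved:.0f}% saved")
```

The same two functions can be applied to any other instance class by swapping in its On-Demand fee and the Upfront and Monthly values from its table.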

db.t2.small

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $19.71 | $0.027 | 21% | $0.034 |
| Partial Upfront | $102.00 | $8.03 | $0.023 | 33% | $0.034 |
| All Upfront | $195.00 | $0.00 | $0.022 | 35% | $0.034 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $218.00 | $5.84 | $0.016 | 52% | $0.034 |
| All Upfront | $403.00 | $0.00 | $0.015 | 55% | $0.034 |
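One way to weigh the payment options against On-Demand is to ask how many hours the instance must run before the reservation costs less. The sketch below is a rough estimate that assumes the reservation is paid in full for the whole term regardless of usage; `break_even_hours` is a hypothetical helper, and the inputs are the one-year db.t2.small Single-AZ figures.

```python
HOURS_IN_YEAR = 8760  # 365 days x 24 hours (assumption)
ON_DEMAND = 0.034     # db.t2.small, Single-AZ, US East (Ohio)


def break_even_hours(upfront: float, monthly: float, years: int, on_demand: float) -> float:
    """Hours of On-Demand usage that would cost as much as the whole reservation."""
    total_cost = upfront + monthly * 12 * years
    return total_cost / on_demand


# (payment option, upfront, monthly) from the one-year db.t2.small table
one_year_options = [
    ("No Upfront", 0.00, 19.71),
    ("Partial Upfront", 102.00, 8.03),
    ("All Upfront", 195.00, 0.00),
]

for name, upfront, monthly in one_year_options:
    hours = break_even_hours(upfront, monthly, 1, ON_DEMAND)
    share = hours / HOURS_IN_YEAR
    print(f"{name:<16} pays off after {hours:,.0f} h ({share:.0%} of the year)")
```

Under these assumptions, an instance that runs for only a small part of the year may be cheaper On-Demand, while one that runs continuously benefits from any of the three options.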

db.t2.medium

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $39.42 | $0.054 | 21% | $0.068 |
| Partial Upfront | $204.00 | $16.79 | $0.046 | 32% | $0.068 |
| All Upfront | $398.00 | $0.00 | $0.045 | 33% | $0.068 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $436.00 | $10.95 | $0.032 | 54% | $0.068 |
| All Upfront | $781.00 | $0.00 | $0.030 | 56% | $0.068 |

db.t2.large

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $78.84 | $0.108 | 21% | $0.136 |
| Partial Upfront | $408.00 | $33.58 | $0.093 | 32% | $0.136 |
| All Upfront | $794.00 | $0.00 | $0.091 | 33% | $0.136 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $872.00 | $22.63 | $0.064 | 53% | $0.136 |
| All Upfront | $1,592.00 | $0.00 | $0.061 | 55% | $0.136 |

db.t2.xlarge

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $141.766 | $0.194 | 29% | $0.272 |
| Partial Upfront | $810.00 | $67.525 | $0.185 | 32% | $0.272 |
| All Upfront | $1,588.00 | $0.00 | $0.181 | 33% | $0.272 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $1,680.00 | $46.647 | $0.128 | 53% | $0.272 |
| All Upfront | $3,292.00 | $0.00 | $0.125 | 54% | $0.272 |

db.t2.2xlarge

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $283.532 | $0.388 | 29% | $0.544 |
| Partial Upfront | $1,620.00 | $135.05 | $0.370 | 32% | $0.544 |
| All Upfront | $3,176.00 | $0.00 | $0.363 | 33% | $0.544 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $3,360.00 | $93.294 | $0.256 | 53% | $0.544 |
| All Upfront | $6,585.00 | $0.00 | $0.251 | 54% | $0.544 |

Multi-AZ Deployment

Region: US East (Ohio)

db.t2.micro

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $19.71 | $0.027 | 21% | $0.034 |
| Partial Upfront | $102.00 | $8.76 | $0.024 | 30% | $0.034 |
| All Upfront | $203.00 | $0.00 | $0.023 | 32% | $0.034 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $218.00 | $5.84 | $0.016 | 52% | $0.034 |
| All Upfront | $403.00 | $0.00 | $0.015 | 55% | $0.034 |

db.t2.small

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $38.69 | $0.053 | 22% | $0.068 |
| Partial Upfront | $204.00 | $16.06 | $0.045 | 33% | $0.068 |
| All Upfront | $389.00 | $0.00 | $0.044 | 35% | $0.068 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $436.00 | $11.68 | $0.033 | 52% | $0.068 |
| All Upfront | $806.00 | $0.00 | $0.031 | 55% | $0.068 |

db.t2.medium

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $79.57 | $0.109 | 20% | $0.136 |
| Partial Upfront | $408.00 | $33.58 | $0.093 | 32% | $0.136 |
| All Upfront | $795.00 | $0.00 | $0.091 | 33% | $0.136 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $872.00 | $21.90 | $0.063 | 54% | $0.136 |
| All Upfront | $1,561.00 | $0.00 | $0.059 | 56% | $0.136 |

db.t2.large

One Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| No Upfront | $0.00 | $157.68 | $0.216 | 20% | $0.27 |
| Partial Upfront | $816.00 | $67.16 | $0.185 | 31% | $0.27 |
| All Upfront | $1,587.00 | $0.00 | $0.181 | 33% | $0.27 |

Three Year Standard Term

| Payment Option | Upfront | Monthly | Effective Hourly | Savings over On-Demand | On-Demand Hourly |
|---|---|---|---|---|---|
| Partial Upfront | $1,744.00 | $45.26 | $0.128 | 52% | $0.27 |
| All Upfront | $3,183.00 | $0.00 | $0.121 | 55% | $0.27 |