Amazon S3: Create A Job

How an S3 Batch Operations job works:


What is an S3 Job?

It is the basic unit of work in S3 Batch Operations.


What does an S3 Job contain?

A job contains all the information needed to run a specified operation on a list of objects.


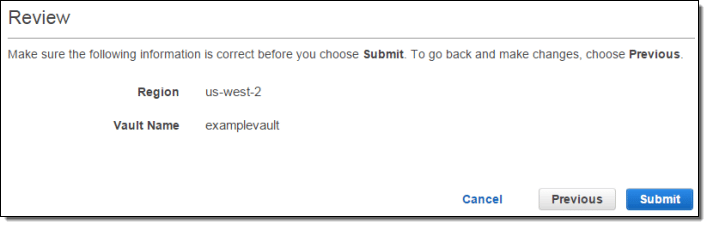

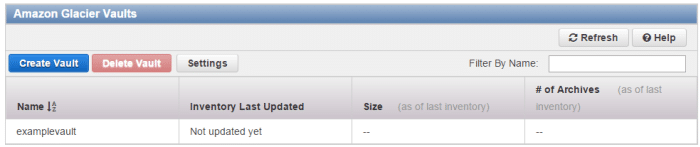

How is an S3 Job created?

You give S3 Batch Operations a list of objects and specify the operation to perform on those objects.


Which operations does S3 Batch Operations support?

S3 Batch Operations supports the following operations:

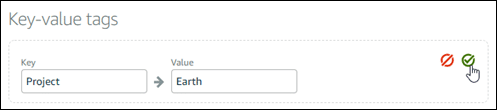

– PUT object tagging

– PUT object ACL

– PUT copy object

– Invoke an AWS Lambda function

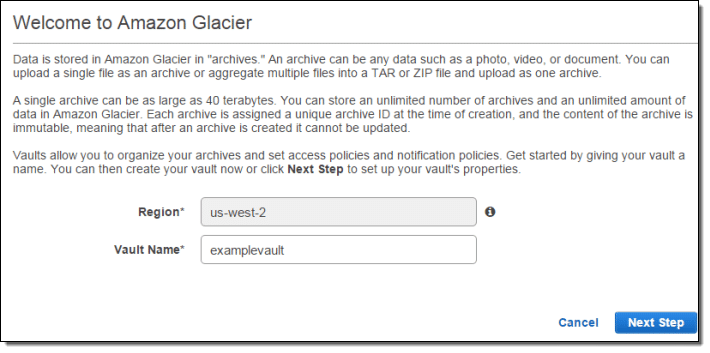

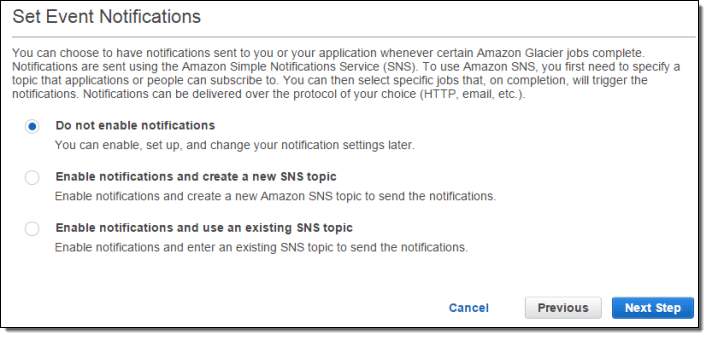

– Initiate S3 Glacier restore

– PUT S3 Object Lock retention

– PUT S3 Object Lock legal hold

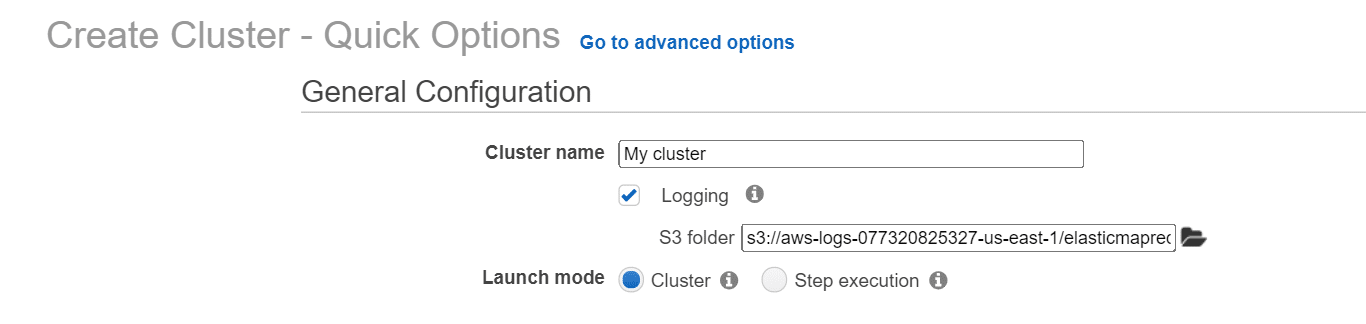
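When a job is created through the API instead of the console, the operation is selected by a single key in the job's `Operation` parameter (the `CreateJob` call of the Amazon S3 Control API). The sketch below maps the console labels used in this article, including the two Object Lock operations whose policies appear later, to those keys. `operation_param` is a hypothetical helper and the tag in the usage example is made up; the per-operation settings are passed through unchecked.

```python
"""Map this article's console operation names to S3 Control CreateJob keys.

Sketch only: the values are member names of the JobOperation structure;
a job specifies exactly one of them.
"""

OPERATION_KEYS = {
    "PUT object tagging": "S3PutObjectTagging",
    "PUT object ACL": "S3PutObjectAcl",
    "PUT copy object": "S3PutObjectCopy",
    "Invoke an AWS Lambda function": "LambdaInvoke",
    "Initiate S3 Glacier restore": "S3InitiateRestoreObject",
    "PUT S3 Object Lock retention": "S3PutObjectRetention",
    "PUT S3 Object Lock legal hold": "S3PutObjectLegalHold",
}


def operation_param(console_name: str, settings: dict) -> dict:
    """Return an Operation value such as {"S3PutObjectTagging": {...}}."""
    try:
        key = OPERATION_KEYS[console_name]
    except KeyError:
        raise ValueError(f"unsupported operation: {console_name!r}") from None
    # A job runs a single operation, so the result has exactly one entry.
    return {key: settings}


if __name__ == "__main__":
    tagging = operation_param(
        "PUT object tagging",
        {"TagSet": [{"Key": "project", "Value": "archive"}]},  # hypothetical tag
    )
    print(tagging)
```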

How do you create an S3 Batch Operations job?

To create a batch job in the S3 console, do the following:

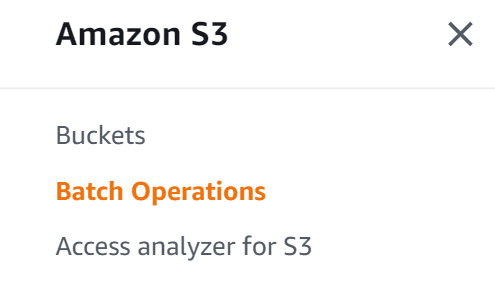

1. Sign in to the AWS Management Console and open the Amazon S3 console at https://console.aws.amazon.com/s3/.

2. In the navigation pane of the S3 console, select Batch Operations.

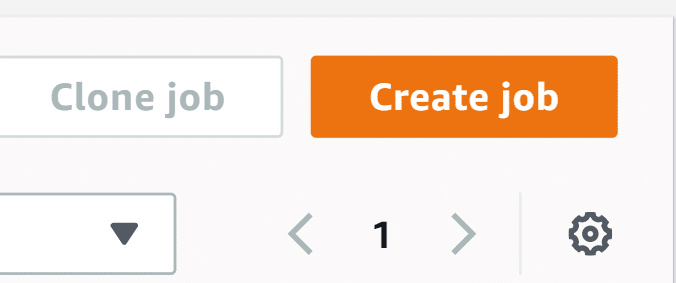

3. Click on Create job.

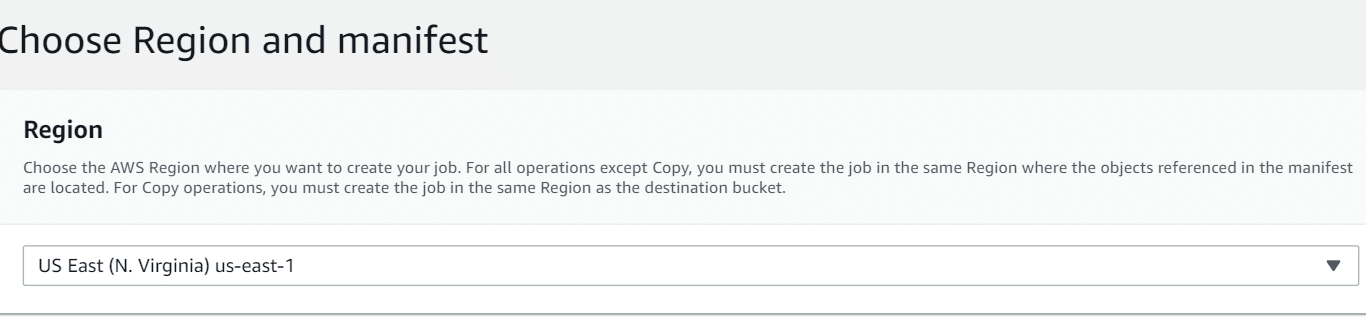

4. Select the Region where you want to create the job.

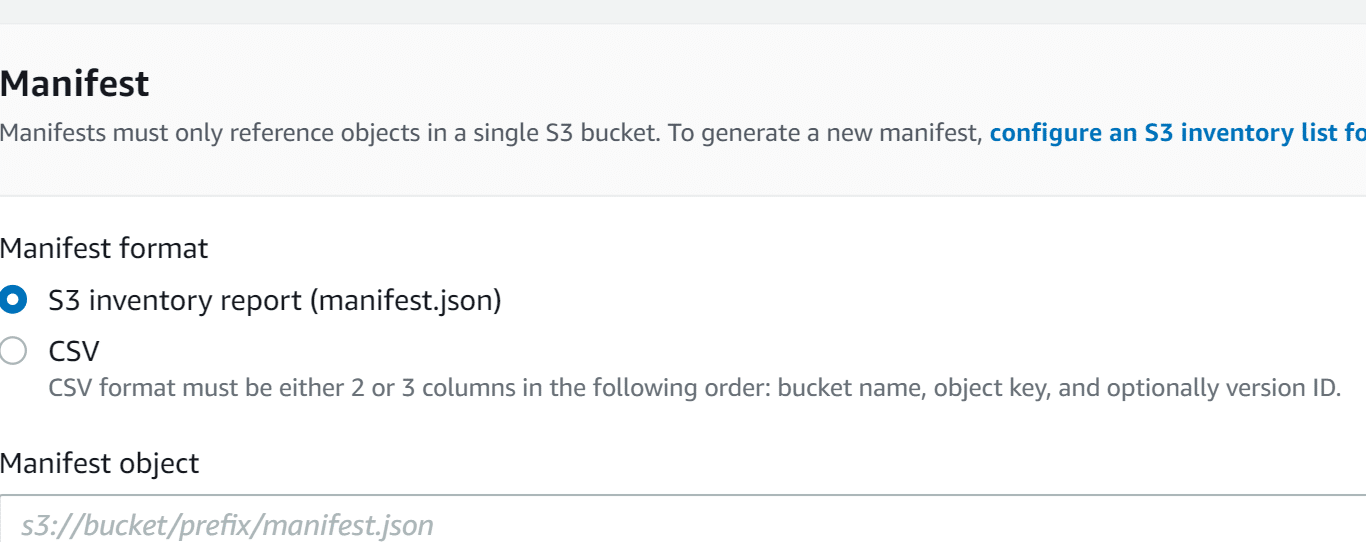

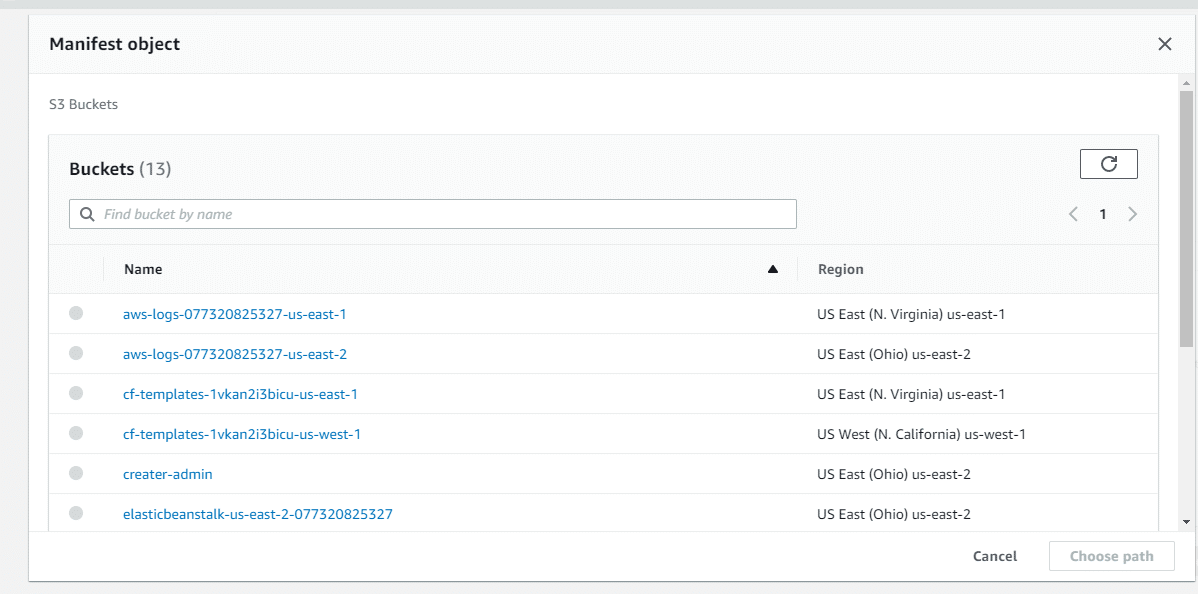

5. Under Manifest format, select the type of manifest object you want to use.

- If you select S3 inventory report, enter the path to the manifest.json object that Amazon S3 generated for the CSV-formatted inventory report. Optionally, enter the version ID of the manifest object if you want to use a version other than the most recent one.

- If you select CSV, enter the path to a CSV-formatted manifest object. The manifest object must follow the format shown in the console. Optionally, enter the version ID of the manifest object if you want to use a version other than the most recent one.

6. Click on Next.

7. Under Operation, select the operation to perform on every object listed in the manifest. Enter the information that operation requires, and then click Next.

8. Under Configure additional options, enter the required information (such as the job priority, the completion report settings, and the IAM role the job uses), and then click Next.

9. On the Review page, verify the settings. To make changes, click Previous and go back; otherwise, click Create job.
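The console steps above have a programmatic counterpart: the `CreateJob` operation of the Amazon S3 Control API (`create_job` on a boto3 `s3control` client). The sketch below only assembles the request parameters with the standard library, so it runs without AWS access. It assumes a CSV manifest; `build_create_job_request`, the account ID, ARNs, ETag, and tag are hypothetical placeholders.

```python
"""Sketch: the console steps expressed as S3 Control CreateJob parameters.

Assumptions: all identifiers below are placeholders, and the dict is meant
for boto3.client("s3control").create_job(**request). Nothing is sent to AWS.
"""
import uuid


def build_create_job_request(
    account_id: str,
    manifest_object_arn: str,
    manifest_etag: str,
    report_bucket_arn: str,
    role_arn: str,
    operation: dict,
    with_version_ids: bool = False,
    priority: int = 10,
) -> dict:
    # CSV manifest columns: bucket, key and, optionally, version ID.
    fields = ["Bucket", "Key"] + (["VersionId"] if with_version_ids else [])
    return {
        "AccountId": account_id,
        # Ask for confirmation before the job starts running.
        "ConfirmationRequired": True,
        # Step 7: exactly one operation per job.
        "Operation": operation,
        # Step 5: where the manifest is and how to read it.
        "Manifest": {
            "Spec": {"Format": "S3BatchOperations_CSV_20180820", "Fields": fields},
            "Location": {"ObjectArn": manifest_object_arn, "ETag": manifest_etag},
        },
        # Step 8: completion report, priority and the IAM role the job assumes.
        "Report": {
            "Bucket": report_bucket_arn,
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "ReportScope": "AllTasks",
        },
        "Priority": priority,
        "RoleArn": role_arn,
        # Idempotency token so a retried request does not create a second job.
        "ClientRequestToken": str(uuid.uuid4()),
    }


if __name__ == "__main__":
    request = build_create_job_request(
        account_id="111122223333",  # hypothetical account
        manifest_object_arn="arn:aws:s3:::example-manifest-bucket/manifest.csv",
        manifest_etag="example-etag",
        report_bucket_arn="arn:aws:s3:::example-report-bucket",
        role_arn="arn:aws:iam::111122223333:role/example-batch-ops-role",
        operation={"S3PutObjectTagging": {"TagSet": [{"Key": "project", "Value": "archive"}]}},
    )
    print(request["Manifest"]["Spec"])
```

With boto3 installed and credentials configured, passing this dict as `boto3.client("s3control").create_job(**request)` would submit the job, and the response carries the new job's `JobId`. The `ETag` must match the manifest object's current ETag.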

How do you specify a manifest?

You can specify a manifest in a create job request in one of two formats:

– S3 inventory report: Must be a CSV-formatted S3 inventory report, and you must specify the manifest.json file that accompanies the inventory report. If the inventory report includes version IDs, S3 Batch Operations operates on those specific object versions.

– CSV file: Each row in the file must contain the bucket name, the object key, and, optionally, the object version ID. Object keys must be URL-encoded, as in the examples below. The manifest must either include a version ID for every object or omit version IDs for every object.

Below you can find an example manifest in CSV format with no version IDs:

```
Examplebucket,objectkey1
Examplebucket,objectkey2
Examplebucket,objectkey3
Examplebucket,photos/jpgs/objectkey4
Examplebucket,photos/jpgs/newjersey/objectkey5
Examplebucket,object%20key%20with%20spaces
```

Below you can find an example manifest in CSV format with version IDs:

```
Examplebucket,objectkey1,PZ9ibn9D5lP6p298B7S9_ceqx1n5EJ0p
Examplebucket,objectkey2,YY_ouuAJByNW1LRBfFMfxMge7XQWxMBF
Examplebucket,objectkey3,jbo9_jhdPEyB4RrmOxWS0kU0EoNrU_oI
Examplebucket,photos/jpgs/objectkey4,6EqlikJJxLTsHsnbZbSRffn24_eh5Ny4
Examplebucket,photos/jpgs/newjersey/objectkey5,imHf3FAiRsvBW_EHB8GOu.NHunHO1gVs
Examplebucket,object%20key%20with%20spaces,9HkPvDaZY5MVbMhn6TMn1YTb5ArQAo3w
```
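The CSV manifest rules above can be sketched with Python's standard library. This is a minimal sketch, not an AWS tool: `build_manifest` is a hypothetical helper, and using `urllib.parse.quote` for the URL encoding is an assumption that reproduces the example rows (it keeps `/` and turns spaces into `%20`).

```python
import csv
import io
from urllib.parse import quote


def build_manifest(rows):
    """Return CSV manifest text for (bucket, key) or (bucket, key, version_id) rows.

    Hypothetical helper: keys are URL-encoded with urllib.parse.quote, which
    keeps "/" and encodes spaces as %20 (and commas as %2C).
    """
    rows = [tuple(r) for r in rows]
    lengths = {len(r) for r in rows}
    if lengths - {2, 3}:
        raise ValueError("each row needs a bucket, a key and an optional version ID")
    if len(lengths) > 1:
        # The manifest must give version IDs for every object or for none.
        raise ValueError("include version IDs for all objects or for none")

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    for bucket, key, *version in rows:
        writer.writerow([bucket, quote(key), *version])
    return out.getvalue()


if __name__ == "__main__":
    print(build_manifest([
        ("Examplebucket", "objectkey1"),
        ("Examplebucket", "photos/jpgs/objectkey4"),
        ("Examplebucket", "object key with spaces"),
    ]), end="")
```

Run as a script, this prints three rows identical to the corresponding lines of the first example. Passing a mix of two-field and three-field rows raises `ValueError` rather than producing a manifest that mixes versioned and unversioned entries.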

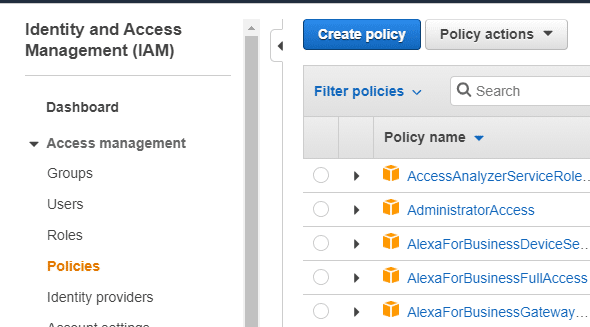

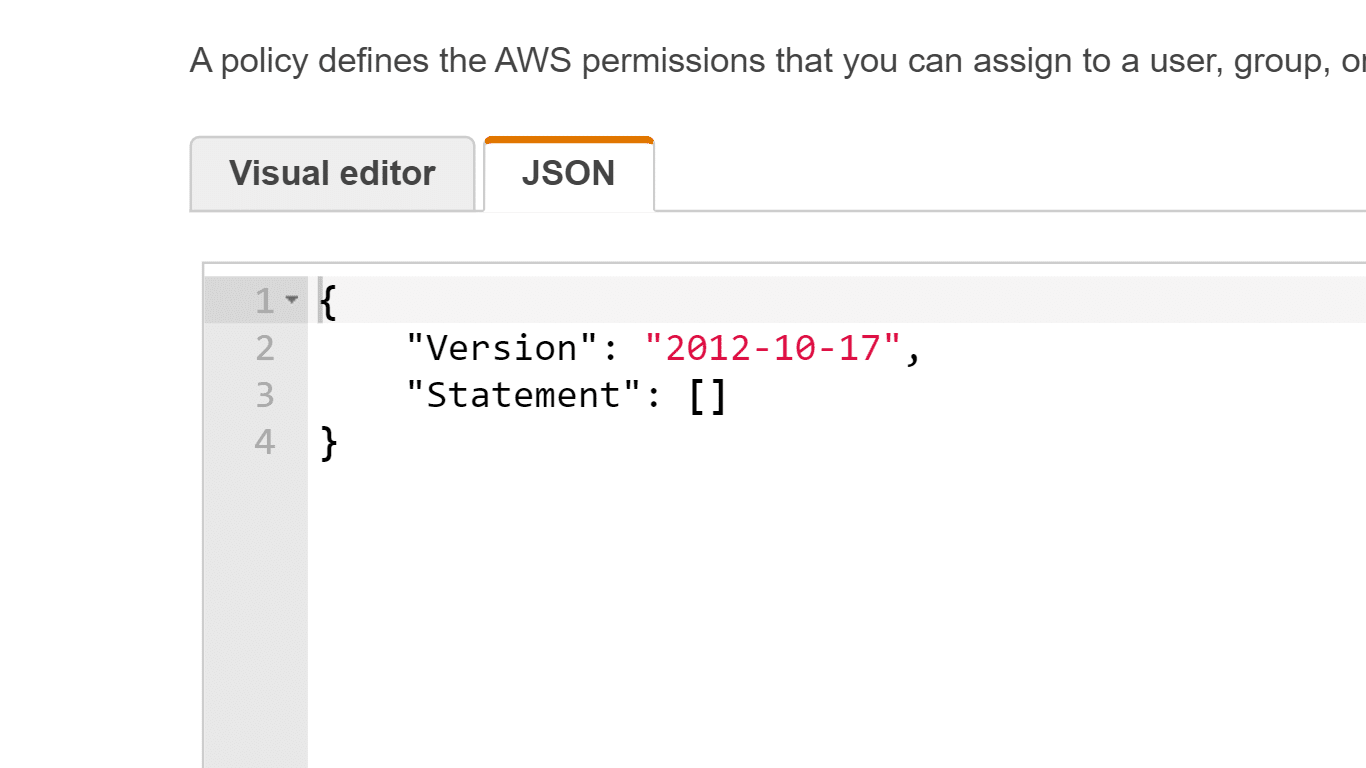

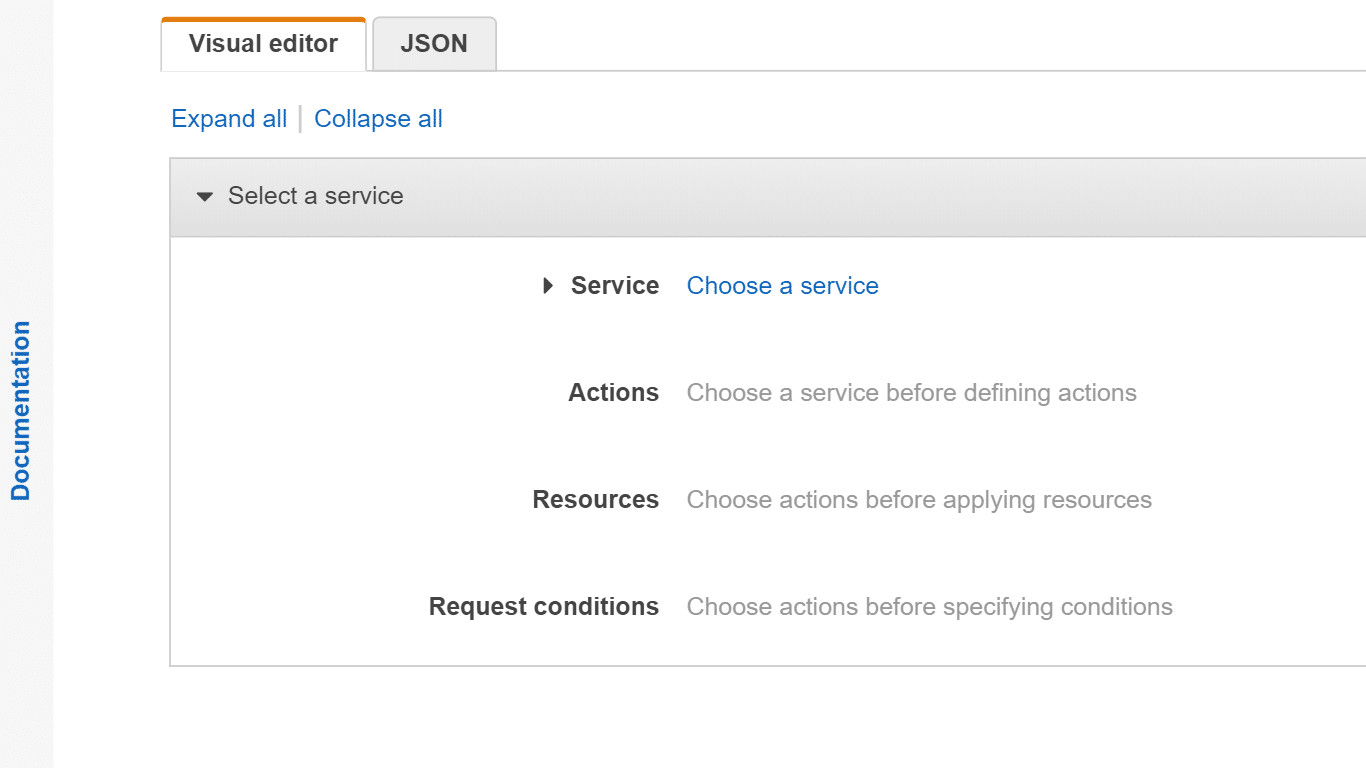

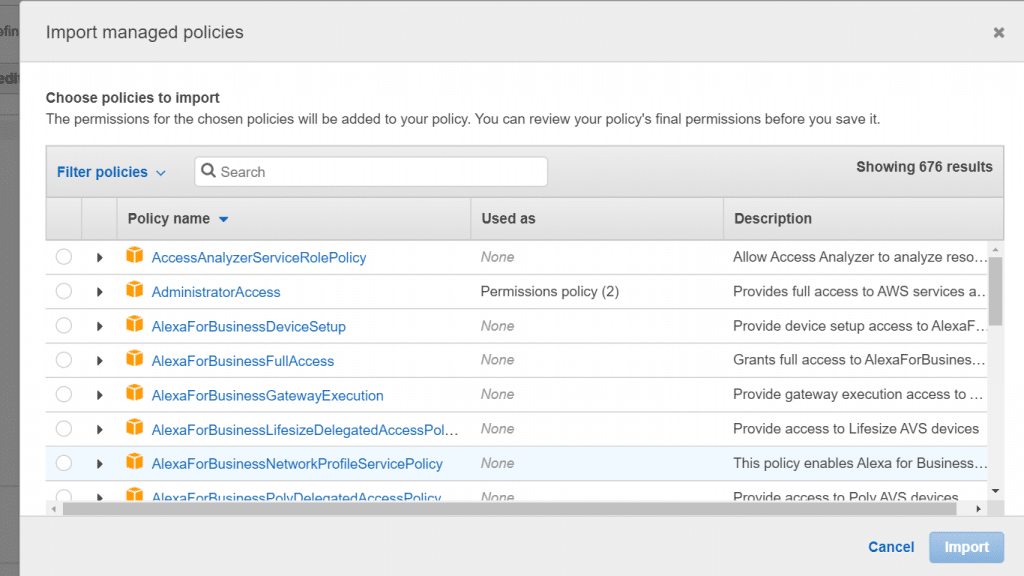

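Permissions policies format: S3 Batch Operations runs the job under the IAM role you choose when you create it, and that role needs a permissions policy for the selected operation. Each policy below also allows the role to read the manifest and to write the completion report. Replace each {{...}} placeholder with the corresponding bucket name.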

– PUT copy object

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["s3:PutObject", "s3:PutObjectAcl", "s3:PutObjectTagging"],
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::{{DestinationBucket}}/*"
    },
    {
      "Action": ["s3:GetObject", "s3:GetObjectAcl", "s3:GetObjectTagging"],
      "Effect": "Allow",
      "Resource": "arn:aws:s3:::{{SourceBucket}}/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```

– PUT object tagging

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:PutObjectTagging", "s3:PutObjectVersionTagging"],
      "Resource": "arn:aws:s3:::{{TargetResource}}/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```

– PUT object ACL

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:PutObjectAcl", "s3:PutObjectVersionAcl"],
      "Resource": "arn:aws:s3:::{{TargetResource}}/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```

– Initiate S3 Glacier restore

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:RestoreObject"],
      "Resource": "arn:aws:s3:::{{TargetResource}}/*"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```

– PUT S3 Object Lock retention

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:GetBucketObjectLockConfiguration",
      "Resource": ["arn:aws:s3:::{{TargetResource}}"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObjectRetention", "s3:BypassGovernanceRetention"],
      "Resource": ["arn:aws:s3:::{{TargetResource}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```

– PUT S3 Object Lock legal hold

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:GetBucketObjectLockConfiguration",
      "Resource": ["arn:aws:s3:::{{TargetResource}}"]
    },
    {
      "Effect": "Allow",
      "Action": "s3:PutObjectLegalHold",
      "Resource": ["arn:aws:s3:::{{TargetResource}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]
    },
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:GetBucketLocation"],
      "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]
    }
  ]
}
```
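Because the policies above are templates, it can help to fill in the placeholders programmatically and confirm the result is valid JSON before attaching it to the role. Typographic quotes, which some editors substitute for straight quotes, make a policy invalid JSON. This is a minimal standard-library sketch: `render_policy` and the bucket names are hypothetical, and it checks only syntax and the presence of `Effect`, `Action`, and `Resource`, not whether IAM grants the intended access.

```python
import json
import re

# Matches placeholders such as {{ReportBucket}} in the policies above.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")

# The "PUT object tagging" policy from this article, used as an example template.
TAGGING_TEMPLATE = """{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow",
     "Action": ["s3:PutObjectTagging", "s3:PutObjectVersionTagging"],
     "Resource": "arn:aws:s3:::{{TargetResource}}/*"},
    {"Effect": "Allow",
     "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
     "Resource": ["arn:aws:s3:::{{ManifestBucket}}/*"]},
    {"Effect": "Allow",
     "Action": ["s3:PutObject", "s3:GetBucketLocation"],
     "Resource": ["arn:aws:s3:::{{ReportBucket}}/*"]}
  ]
}"""


def render_policy(template: str, values: dict) -> dict:
    """Substitute bucket names for placeholders and parse the result as JSON."""
    missing = set(PLACEHOLDER.findall(template)) - set(values)
    if missing:
        raise ValueError(f"no value for placeholder(s): {sorted(missing)}")
    text = PLACEHOLDER.sub(lambda m: values[m.group(1)], template)
    # json.loads raises json.JSONDecodeError (a ValueError) on invalid JSON,
    # including a policy pasted with typographic quotes.
    policy = json.loads(text)
    for statement in policy["Statement"]:
        for field in ("Effect", "Action", "Resource"):
            if field not in statement:
                raise ValueError(f"statement is missing {field!r}")
    return policy


if __name__ == "__main__":
    policy = render_policy(TAGGING_TEMPLATE, {
        "TargetResource": "example-target-bucket",  # hypothetical bucket names
        "ManifestBucket": "example-manifest-bucket",
        "ReportBucket": "example-report-bucket",
    })
    print(json.dumps(policy, indent=2))
```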