This article provides a detailed overview of AWS Elastic Beanstalk vs AWS CloudFormation and highlights a few of their general use cases.

Elastic Beanstalk vs CloudFormation: Which one is Better?

AWS users often ask how Elastic Beanstalk differs from CloudFormation. The question comes up because AWS offers several options for provisioning IT infrastructure and for deploying and managing applications. These range from convenient, easy-to-set-up services to lower-level services that give you fine-grained control.

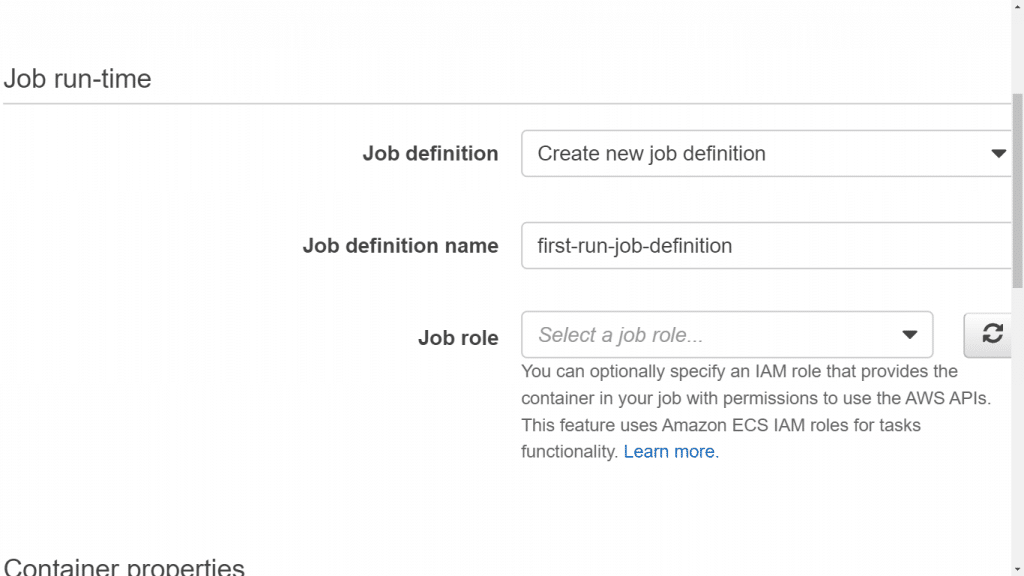

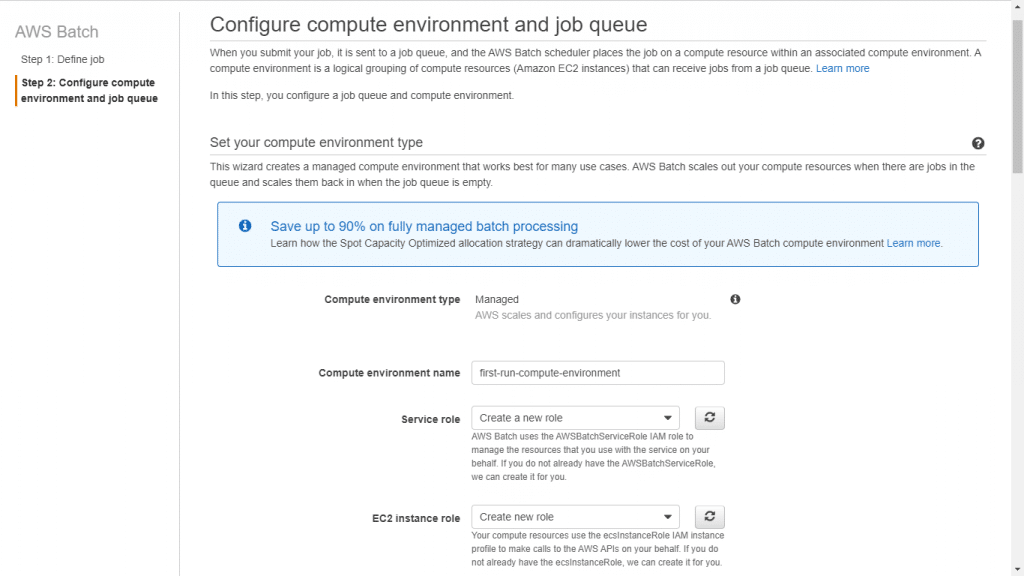

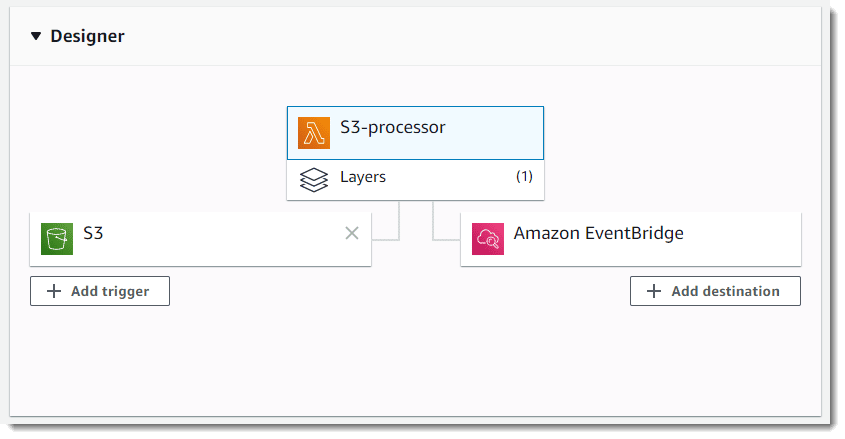

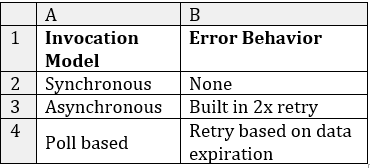

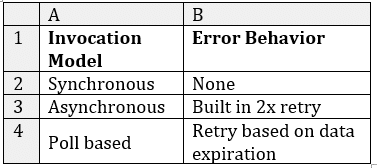

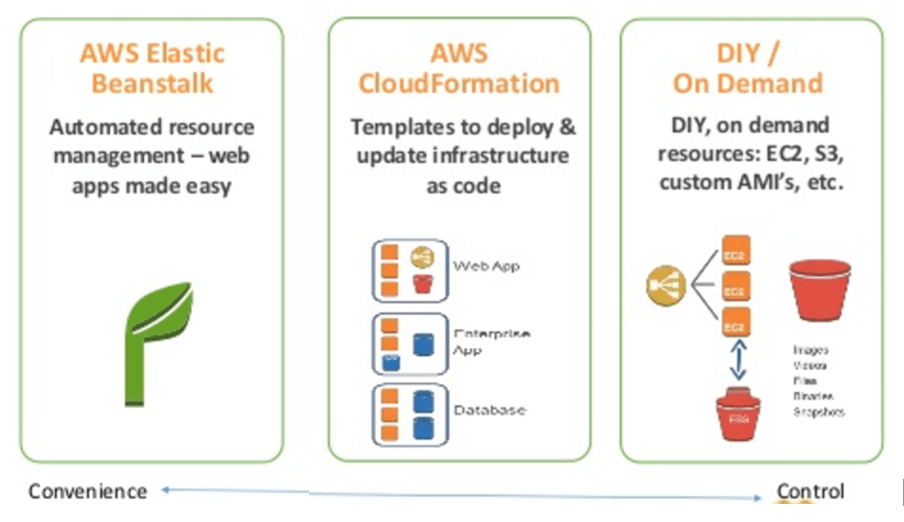

Deployment and Management Options in Elastic Beanstalk vs CloudFormation:

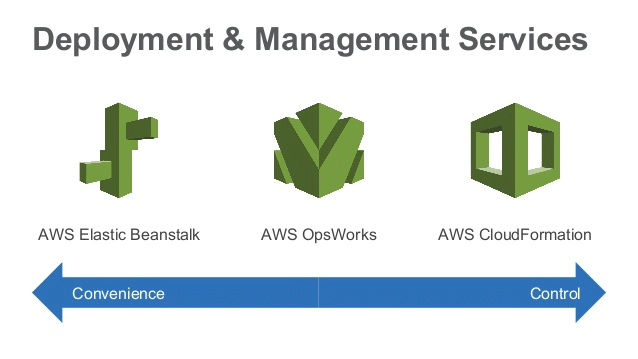

Elastic Beanstalk vs CloudFormation – Deployment and Management differences

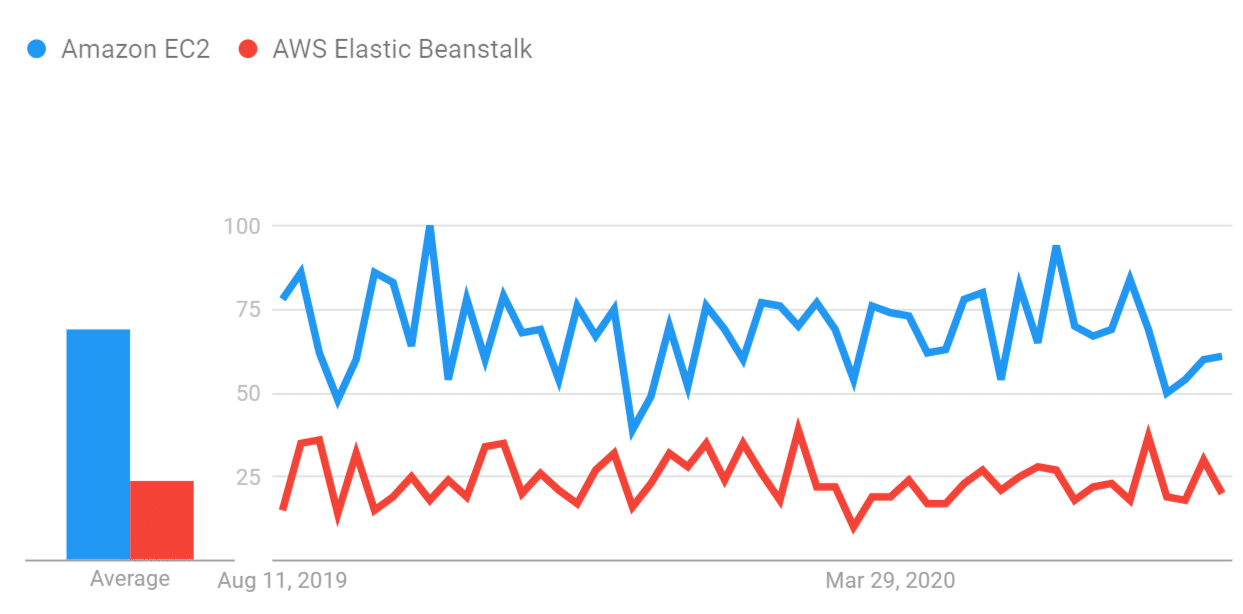

As the illustration above shows, users who prioritize convenience will find AWS Elastic Beanstalk a good fit. Those who value control as much as convenience are better served by AWS CloudFormation.

What is AWS Elastic Beanstalk?

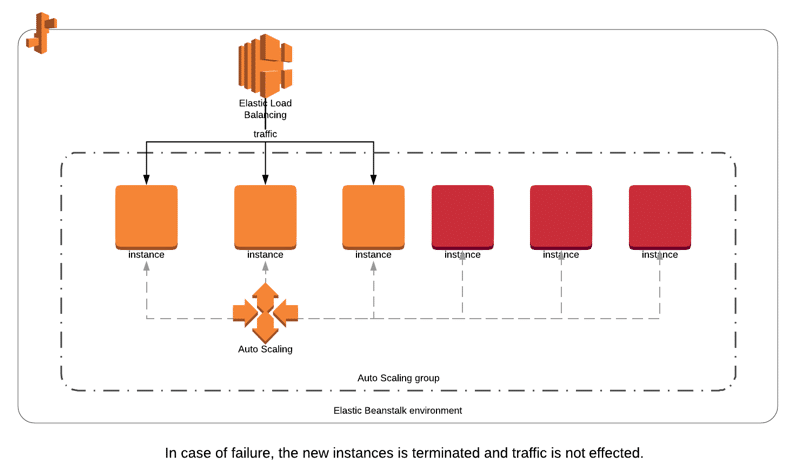

Elastic Beanstalk vs CloudFormation – How AWS Elastic Beanstalk Works

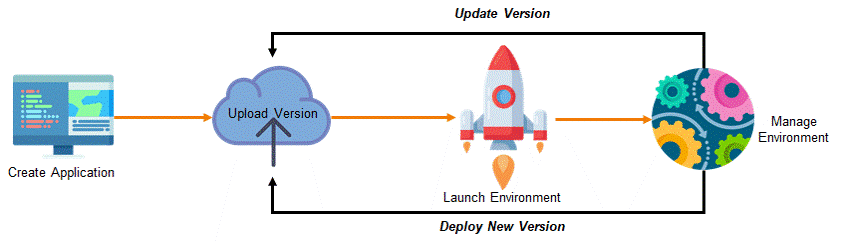

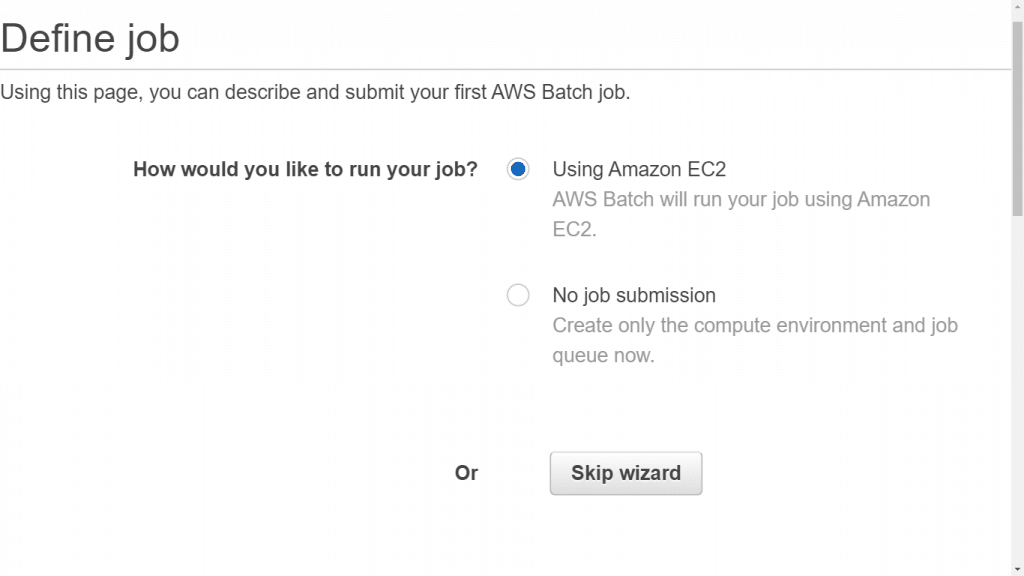

AWS Elastic Beanstalk is a fully managed service for deploying, running, and scaling web applications and services developed with Java, .NET, PHP, Node.js, Python, Ruby, Go, and Docker on familiar servers such as Apache, Nginx, and IIS.

Elastic Beanstalk handles deployment, capacity provisioning, load balancing, scaling, and application health monitoring, so developers can focus on writing the application rather than managing infrastructure. It also supports continuous integration and continuous deployment (CI/CD) workflows with several deployment strategies, such as rolling updates, blue/green deployments, and canary deployments.
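The rolling-update strategy can be illustrated with a short Python sketch. It is a simplified model, not Elastic Beanstalk's actual scheduler: the helper `rolling_batches` is hypothetical, and it assumes a percentage batch size is rounded up to at least one instance. The option settings mirror the `aws:elasticbeanstalk:command` namespace (`DeploymentPolicy`, `BatchSizeType`, `BatchSize`) that Elastic Beanstalk uses to select a deployment policy; the values shown are illustrative.

```python
import math

# Option settings in the (Namespace, OptionName, Value) shape Elastic Beanstalk uses.
# The chosen values are illustrative only.
DEPLOYMENT_OPTIONS = [
    {"Namespace": "aws:elasticbeanstalk:command", "OptionName": "DeploymentPolicy", "Value": "Rolling"},
    {"Namespace": "aws:elasticbeanstalk:command", "OptionName": "BatchSizeType", "Value": "Percentage"},
    {"Namespace": "aws:elasticbeanstalk:command", "OptionName": "BatchSize", "Value": "30"},
]


def rolling_batches(instance_ids, batch_percent):
    """Split instances into sequential batches for a simplified rolling update.

    Assumption (hypothetical model): the batch size is the given percentage of
    the fleet, rounded up, with a minimum of one instance per batch.
    """
    if not 0 < batch_percent <= 100:
        raise ValueError("batch_percent must be in (0, 100]")
    size = max(1, math.ceil(len(instance_ids) * batch_percent / 100))
    return [instance_ids[i:i + size] for i in range(0, len(instance_ids), size)]


if __name__ == "__main__":
    fleet = [f"i-{n:04d}" for n in range(1, 8)]  # seven hypothetical instance IDs
    batch_size = int(next(o["Value"] for o in DEPLOYMENT_OPTIONS if o["OptionName"] == "BatchSize"))
    for step, batch in enumerate(rolling_batches(fleet, batch_size), start=1):
        # Only this batch is taken out of service; the others keep serving traffic.
        print(f"batch {step}: updating {batch}")
```

With seven instances and a 30% batch size, the sketch updates three, three, and then one instance, so most of the fleet stays in service during each step.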

- Helps you deploy, manage, and monitor the scaling of your apps.

- Integrates easily with developer tools.

- A higher-level service with fast deployment and low management effort for both web server and worker environments.

- Provides an environment for simply deploying and running apps on AWS.

- Makes it quick and easy to get an app up and running in the cloud.

- Offers a one-stop experience for app lifecycle management.

- Requires minimal configuration.

- Requires little manual effort beyond writing the app code and defining a small amount of configuration.

- The best choice for developers who want to deploy code without worrying about the underlying infrastructure.

Head to the Elastic Beanstalk console here.

What is AWS CloudFormation?

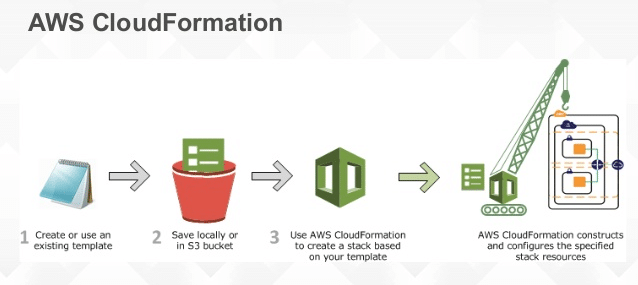

Elastic Beanstalk vs CloudFormation – How AWS CloudFormation Works

AWS CloudFormation is an Infrastructure as Code (IaC) service for modeling, provisioning, and managing AWS resources by writing code. It lets you create, update, and delete a collection of related AWS resources in a single, orchestrated operation. Because provisioning is defined as code, you avoid manual configuration and can keep every change under version control. This makes it easy to compare against previous versions, roll back deployments, and repeat or replicate operations.

CloudFormation uses template files: JSON- or YAML-formatted text files that serve as blueprints for your AWS infrastructure and can be stored in an S3 bucket or locally on your computer. A template can define a wide range of AWS resources, such as EC2 instances and Elastic Load Balancing load balancers. The collection of resources created from a template is called a stack, and all of the resources in a stack are created and managed as a single unit. This is essential for managing complex infrastructure deployments and helps ensure that all the resources are created and configured correctly; if stack creation fails, CloudFormation rolls the stack back by default.
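To show what a template looks like, here is a minimal sketch that builds one as a Python dictionary and serializes it to JSON. The logical ID `WebServer`, the parameter names, and the default instance type are illustrative assumptions; the top-level sections (`AWSTemplateFormatVersion`, `Parameters`, `Resources`, `Outputs`) follow the standard template structure.

```python
import json

# A minimal CloudFormation template expressed as a Python dict.
# Logical IDs and parameter names here are illustrative, not required names.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Minimal example: one EC2 instance",
    "Parameters": {
        "ImageId": {"Type": "AWS::EC2::Image::Id", "Description": "AMI to launch"},
        "InstanceType": {"Type": "String", "Default": "t3.micro"},
    },
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                # Ref on a parameter resolves to the value supplied at stack creation.
                "ImageId": {"Ref": "ImageId"},
                "InstanceType": {"Ref": "InstanceType"},
            },
        }
    },
    "Outputs": {
        # Ref on an EC2 instance resource returns its instance ID.
        "InstanceId": {"Value": {"Ref": "WebServer"}},
    },
}


def to_template_body(tpl):
    """Serialize a template dict to JSON text usable as a template body."""
    # Resources is the one mandatory section of a template.
    if not tpl.get("Resources"):
        raise ValueError("a CloudFormation template needs a non-empty Resources section")
    return json.dumps(tpl, indent=2)


if __name__ == "__main__":
    print(to_template_body(template))
```

Saved as `template.json`, the output could be deployed with `aws cloudformation create-stack --stack-name demo --template-body file://template.json --parameters ParameterKey=ImageId,ParameterValue=<your AMI ID>`, where `demo` is a placeholder stack name.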

To modify existing AWS resources, you update the template file and let CloudFormation update the stack, so you do not have to modify each individual resource manually.
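When a template is updated, CloudFormation compares it with the current stack, and a change set lets you preview that comparison before it is applied. The sketch below is a deliberately simplified, hypothetical model of that comparison at the level of logical resource IDs; the helper `diff_resources` is not part of any AWS API, and real change sets also report whether each modification requires replacing the resource.

```python
def diff_resources(old_template, new_template):
    """Classify logical resource IDs as added, removed, or modified.

    Simplified model: a resource counts as "modified" if any part of its
    definition (type, properties, or other attributes) differs.
    """
    old = old_template.get("Resources", {})
    new = new_template.get("Resources", {})
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    modified = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"Add": added, "Remove": removed, "Modify": modified}


if __name__ == "__main__":
    v1 = {"Resources": {
        "WebServer": {"Type": "AWS::EC2::Instance", "Properties": {"InstanceType": "t3.micro"}},
        "Logs": {"Type": "AWS::S3::Bucket"},
    }}
    v2 = {"Resources": {
        "WebServer": {"Type": "AWS::EC2::Instance", "Properties": {"InstanceType": "t3.small"}},
        "Queue": {"Type": "AWS::SQS::Queue"},
    }}
    print(diff_resources(v1, v2))
    # {'Add': ['Queue'], 'Remove': ['Logs'], 'Modify': ['WebServer']}
```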

CloudFormation also provides a consistent way to manage your AWS resources across multiple accounts and Regions. You can recreate stacks in another Region, or create separate environments such as development, staging, and production, without configuring each environment manually.
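Reusing one template across environments usually comes down to changing parameter values. The sketch below uses hypothetical environment names, parameter names, and values; it builds the `ParameterKey`/`ParameterValue` list shape that the CloudFormation `CreateStack` API accepts, plus a per-environment stack name.

```python
import json

# One template, different parameter values per environment.
# Environment names, parameter names, and values are hypothetical examples.
ENVIRONMENTS = {
    "dev": {"InstanceType": "t3.micro", "DesiredCapacity": "1"},
    "staging": {"InstanceType": "t3.small", "DesiredCapacity": "2"},
    "prod": {"InstanceType": "m5.large", "DesiredCapacity": "4"},
}


def stack_parameters(env):
    """Return parameters as a list of {ParameterKey, ParameterValue} dicts."""
    values = ENVIRONMENTS[env]
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in sorted(values.items())]


def stack_name(app, env):
    # Stack names must be unique within an account and Region, so include the environment.
    return f"{app}-{env}"


if __name__ == "__main__":
    for env in ENVIRONMENTS:
        print(stack_name("myapp", env), json.dumps(stack_parameters(env)))
```

Each name and parameter list could then be passed, together with the same template body, to a `create_stack` call (for example through boto3's CloudFormation client), once per environment or per target Region.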

- AWS CloudFormation provides modeling, provisioning, and version control for a variety of AWS resources.

- CloudFormation complements OpsWorks and Elastic Beanstalk.

- As a lower-level service, it gives you fine-grained control over provisioning and managing stacks of resources based on templates.

- Templates let you version-control your infrastructure as code.

- CloudFormation simplifies deploying multiple environments from one template by changing only the parameter values.

- CloudFormation can provision many types of AWS resources.

- CloudFormation can also spin up the infrastructure required by diverse apps deployed on AWS.

Head to the CloudFormation console here.

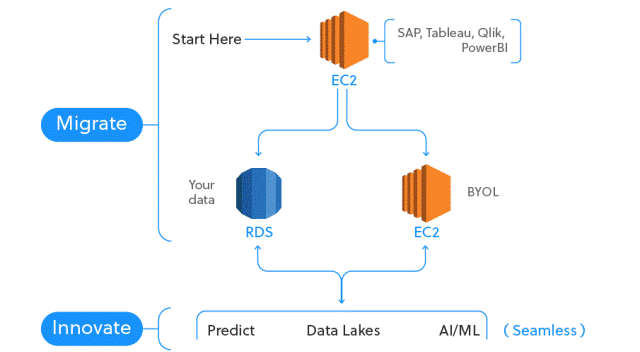

Using CloudFormation along with Elastic Beanstalk:

- CloudFormation supports Elastic Beanstalk applications and environments as resource types.

- This means a single template can create and manage an Elastic Beanstalk-hosted app together with an RDS database that stores the app's data.

- Any other supported resource type can be added to the template in the same way as the RDS database.
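As a sketch of this pattern, the template below (built as a Python dictionary mirroring a JSON template) declares an `AWS::ElasticBeanstalk::Application`, an `AWS::ElasticBeanstalk::Environment`, and an `AWS::RDS::DBInstance`, and passes the database endpoint to the app as an environment variable. The logical IDs, the `DB_HOST` variable name, the engine, and the sizes are assumptions for illustration; VPC, security-group, and application-version settings are omitted, so this is not production-ready. The hypothetical helper `referenced_ids` checks that every `Ref`/`Fn::GetAtt` target is declared.

```python
import json

# Illustrative template: an Elastic Beanstalk app and environment plus an RDS database.
# Networking (VPC, security groups) and application versions are intentionally omitted.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        # Supply a current solution stack name for your platform when creating the stack.
        "SolutionStackName": {"Type": "String"},
        "DBPassword": {"Type": "String", "NoEcho": True, "MinLength": 8},
    },
    "Resources": {
        "AppDatabase": {
            "Type": "AWS::RDS::DBInstance",
            "Properties": {
                "Engine": "mysql",
                "DBInstanceClass": "db.t3.micro",
                "AllocatedStorage": "20",
                "MasterUsername": "appadmin",
                "MasterUserPassword": {"Ref": "DBPassword"},
            },
        },
        "App": {
            "Type": "AWS::ElasticBeanstalk::Application",
            "Properties": {"Description": "App hosted on Elastic Beanstalk"},
        },
        "AppEnvironment": {
            "Type": "AWS::ElasticBeanstalk::Environment",
            "Properties": {
                "ApplicationName": {"Ref": "App"},
                "SolutionStackName": {"Ref": "SolutionStackName"},
                "OptionSettings": [
                    {
                        # Expose the database host name to the app as an environment variable.
                        "Namespace": "aws:elasticbeanstalk:application:environment",
                        "OptionName": "DB_HOST",
                        "Value": {"Fn::GetAtt": ["AppDatabase", "Endpoint.Address"]},
                    }
                ],
            },
        },
    },
}


def referenced_ids(node):
    """Collect logical IDs referenced through Ref or Fn::GetAtt anywhere in node."""
    found = set()
    if isinstance(node, dict):
        if "Ref" in node:
            found.add(node["Ref"])
        if "Fn::GetAtt" in node:
            found.add(node["Fn::GetAtt"][0])
        for value in node.values():
            found |= referenced_ids(value)
    elif isinstance(node, list):
        for item in node:
            found |= referenced_ids(item)
    return found


if __name__ == "__main__":
    # Pseudo parameters such as AWS::Region are not handled by this simple check.
    declared = set(template["Parameters"]) | set(template["Resources"])
    missing = referenced_ids(template["Resources"]) - declared
    assert not missing, f"undeclared references: {missing}"
    print(json.dumps(template, indent=2))
```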

What are the key differences between AWS Elastic Beanstalk and AWS CloudFormation?

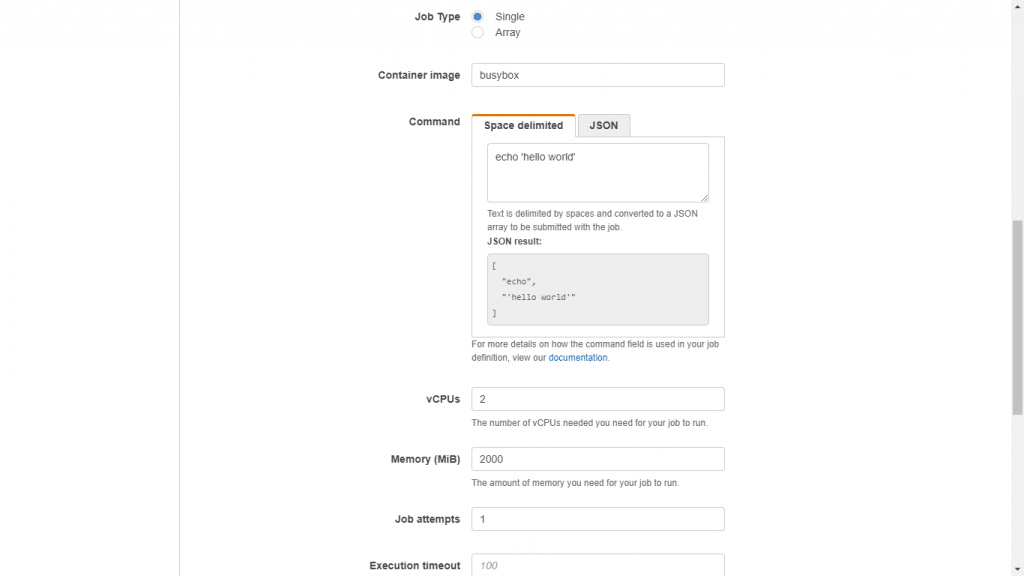

Elastic Beanstalk vs CloudFormation – Control and Convenience

Elastic Beanstalk: For deploying and managing your apps quickly. A higher-level service with more convenience.

CloudFormation: For creating and managing collections of related resources. You do more of the work yourself but have more control.

Elastic Beanstalk vs CloudFormation by Category:

AWS Elastic Beanstalk falls under the “Platform as a Service” category of the tech stack, while AWS CloudFormation falls under “Infrastructure Build Tools”.

Some of AWS Elastic Beanstalk’s most important features:

- Pay only for the resources you need

- Built on familiar software stacks

- Fast and easy deployment

Features provided by AWS CloudFormation:

- AWS CloudFormation comes with ready-made, complete sample templates.

- Nothing is hidden: templates are easy-to-understand JSON text files.

- There is no need to reinvent the wheel: a template can be reused again and again to create identical copies of a stack, or used as a starting point for a new stack.

Comparison of Elastic Beanstalk & CloudFormation:

Elastic Beanstalk vs CloudFormation – Use Cases

| Service | What it does | Advantages |
| --- | --- | --- |
| AWS Elastic Beanstalk | Directly manages EC2, Auto Scaling, and Elastic Load Balancing | Lets developers manage only their code, not the underlying systems |
| AWS CloudFormation | Uses JSON (or YAML) template files to define and launch AWS resources such as ELB, Auto Scaling, and EC2 | Simplifies infrastructure management for systems engineers |

AWS Elastic Beanstalk is generally considered the simpler automation option: it lets users set up fairly complex deployment environments without deep AWS knowledge. AWS CloudFormation is more demanding and complex at first, but becomes straightforward once you have some hands-on experience.

This approach works best for web endpoints that are fairly complex in terms of the services and systems they require but end up with a small number of web front ends backed by an RDS database.

Here are a few more resources on AWS services:

AWS Elastic Beanstalk Basics

AWS Elastic Beanstalk Pricing

AWS Elastic Beanstalk Vs EC2

AWS Elastic Beanstalk vs Lambda

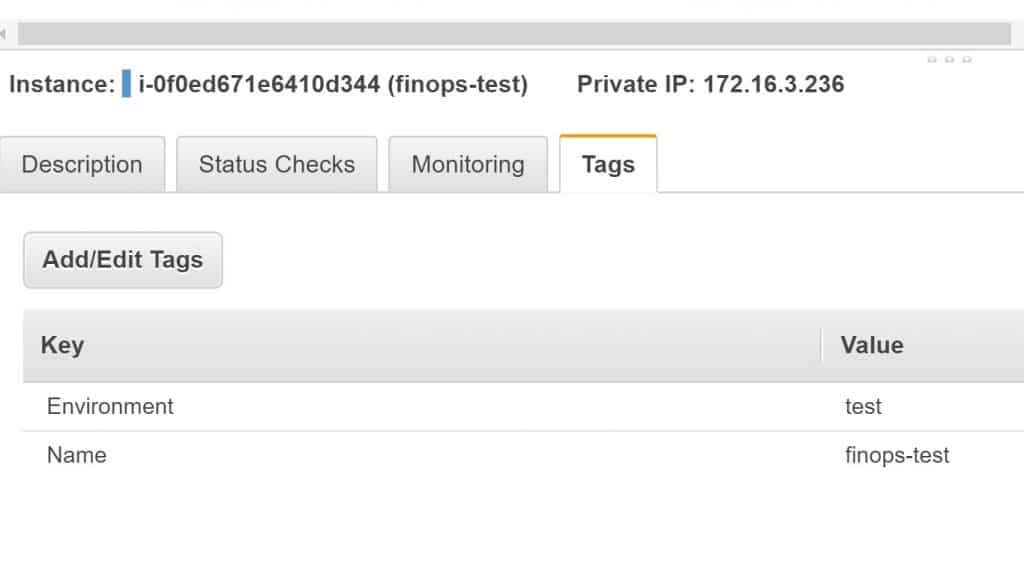

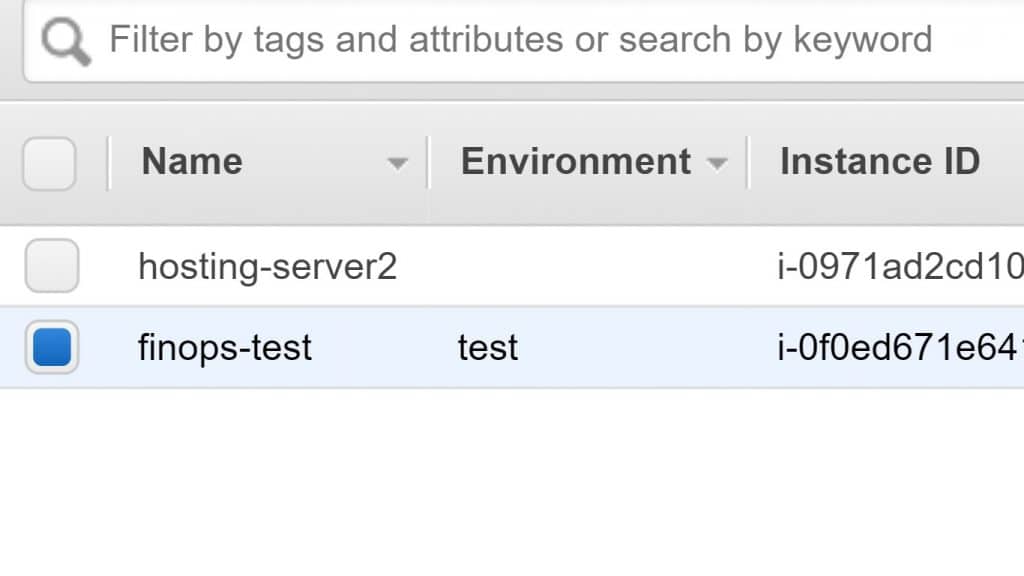

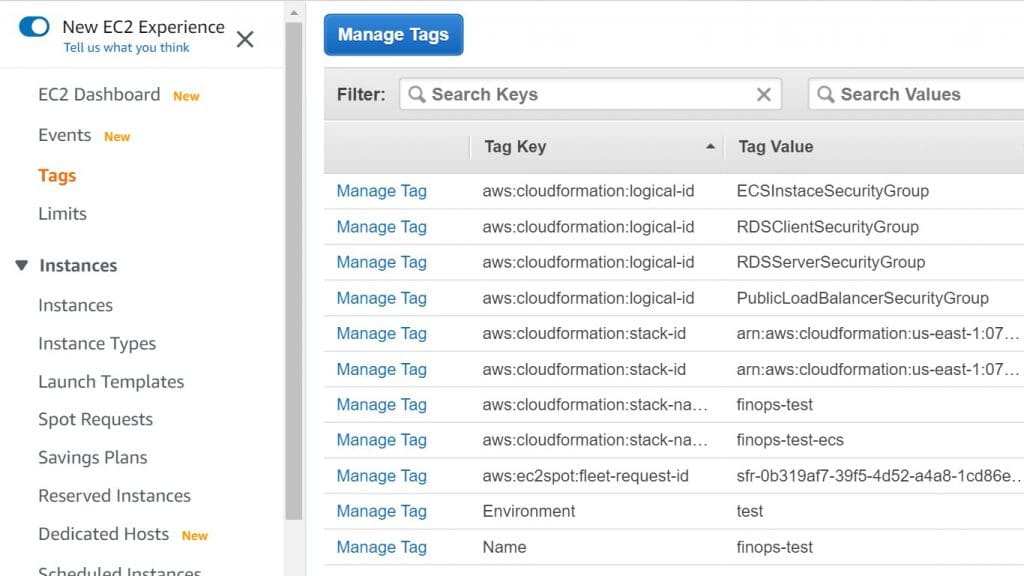

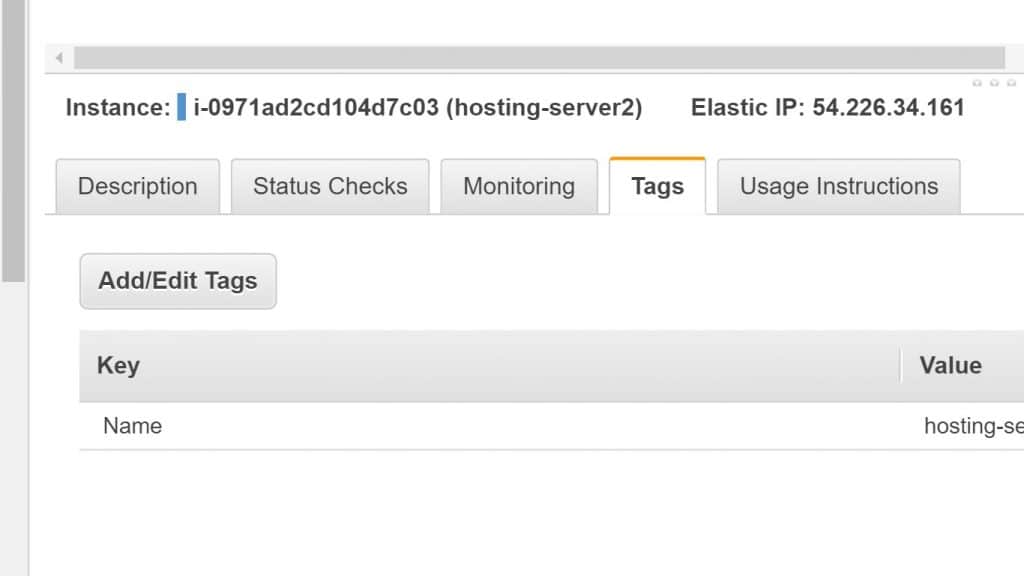

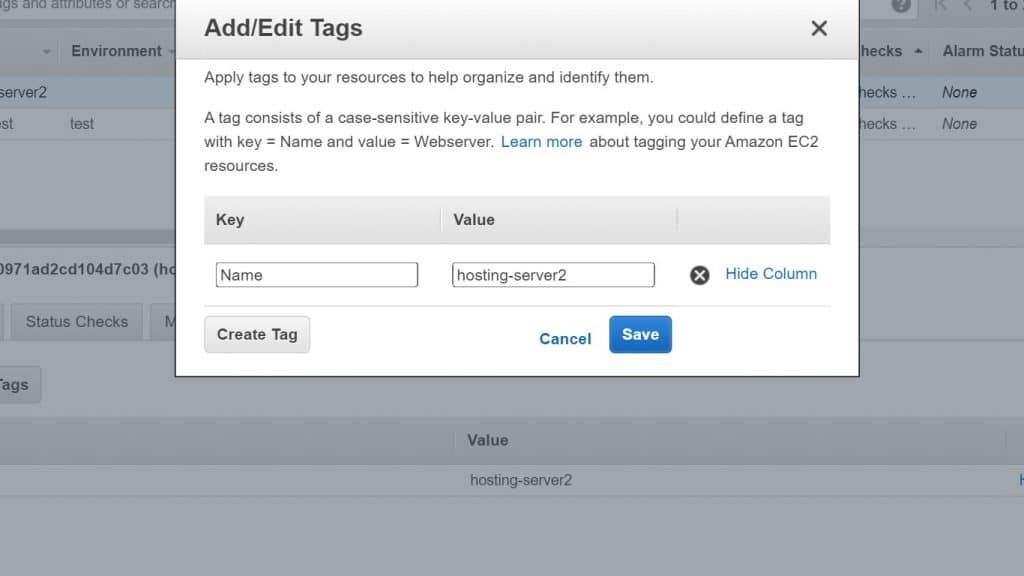

AWS EC2 Tagging

- CloudySave is an all-round, one-stop shop for your organization and teams to reduce your AWS cloud costs by more than 55%.

- CloudySave’s goal is to provide clear visibility into spending and usage patterns for your engineering and Ops teams.

- Have a quick look at CloudySave’s Cost Calculator to estimate real-time AWS costs.

- Sign up now and uncover instant savings opportunities.