Understanding the cloud pricing models

Cloud computing offers infrastructure and resources to organizations at a lower cost than traditional systems. Ideally, cloud services are maintenance-free resources that save effort for development and operations teams. These services are mainly classified as IaaS (Infrastructure as a Service), PaaS (Platform as a Service), and SaaS (Software as a Service). However, customers need to match these resources and services to their actual workload and usage needs; otherwise, they might end up spending more.

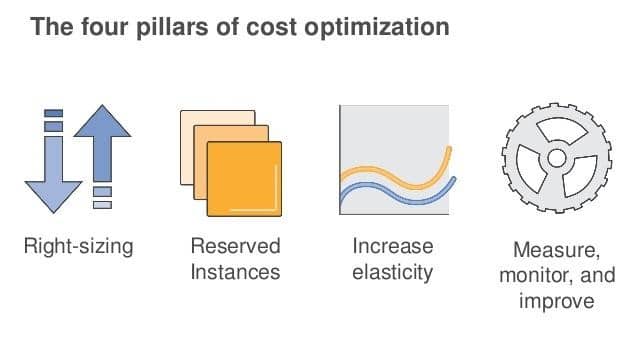

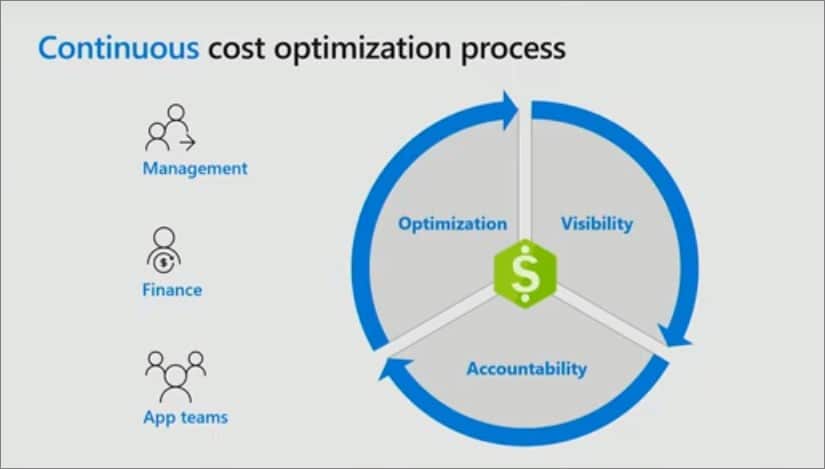

Right-sizing resources and choosing only the required services is not enough. To reduce costs, IT and engineering teams need to work at the same level. IT teams tend to know more about internal server processes, storage, and load balancing, whereas engineers are often left with just integrating cloud services into their respective projects. The communication gap between management, finance, and developers often leads to an increase in the overall budget.

If engineering teams are informed about the cost management of the service model, including a component-wise drill-down of the associated costs, the chances of going over budget and of project ramp-downs are reduced.

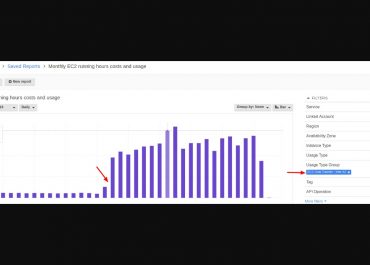

Cloud cost management means finding cost-effective ways to maximize cloud usage and efficiency. It is a form of organizational planning that allows enterprises to understand and choose cloud technology models, and to evaluate and manage the costs associated with deploying an application. The on-demand model might appear simple, since it provides the flexibility to increase or reduce resources based on business requirements. In practice, without proper knowledge, skills, design, and planning, cloud infrastructure can turn into a messy and complex setup that is difficult to track and maintain. Integrating, using, and maintaining cloud technology is not the job of one team. Engineering teams should be aware of the pricing models of cloud services, because that awareness leads to significant savings, appropriate management, and better decision making. A cloud cost management strategy can help control costs, which depend on obvious driving factors such as storage, network traffic, web services, VM instances, licenses, subscriptions, and training and support.
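A component-wise drill-down of this kind can be sketched in a few lines of Python. This is a minimal illustration, not a real billing API: the function name `cost_breakdown`, the `(driver, amount)` line-item format, and all figures are assumptions made up for the example.

```python
from collections import defaultdict


def cost_breakdown(line_items):
    """Group billing line items by cost driver.

    line_items: iterable of (driver, amount) pairs (hypothetical format).
    Returns {driver: (total, share_of_bill)}, largest total first.
    """
    totals = defaultdict(float)
    for driver, amount in line_items:
        totals[driver] += amount

    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return {
        driver: (total, total / grand_total if grand_total else 0.0)
        for driver, total in ranked
    }


if __name__ == "__main__":
    # Made-up monthly line items, for illustration only.
    items = [
        ("VM instances", 600.0),
        ("Storage", 150.0),
        ("Network traffic", 50.0),
        ("VM instances", 200.0),
    ]
    for driver, (total, share) in cost_breakdown(items).items():
        print(f"{driver}: {total:.2f} ({share:.0%} of bill)")
```

Sorting by total puts the largest cost driver first, which is usually where a team would start looking for savings.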

Engineering teams and other departments must understand the two types of pricing models used in the cloud: fixed and dynamic.

Fixed Pricing Models

Fixed pricing is also known as static pricing, because the price stays stable for a long period. This model is further categorized as:

- Pay-per-use – Users pay only for what they use. The charge can depend on the time frame or on the quantity consumed of a specific service.

- Subscription – Users pay on a recurring basis to access the elements of a service. This gives customers the flexibility to subscribe to a specific combination of pre-selected service units for a fixed, longer time frame, such as monthly or yearly.

- Hybrid – This combines the features of the above two models. Prices are set based on the subscription model, while usage is limited according to the pay-per-use model.
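The three fixed models above can be compared with a small Python sketch. The `FixedPricePlan` class, its fields, and all rates are hypothetical. The hybrid formula is one common reading and an assumption here: a flat subscription fee that covers an included quota, with any usage beyond the quota billed per unit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FixedPricePlan:
    """Hypothetical plan parameters; all values are illustrative."""
    unit_rate: float         # price per unit consumed (pay-per-use)
    subscription_fee: float  # flat fee per billing period (subscription)
    included_units: float    # units covered by the fee (hybrid, assumed)


def pay_per_use_cost(plan, units_used):
    """Charge only for the units actually consumed."""
    return plan.unit_rate * units_used


def subscription_cost(plan, periods=1):
    """Charge a flat fee per period, regardless of usage."""
    return plan.subscription_fee * periods


def hybrid_cost(plan, units_used):
    """Flat fee plus per-unit charge for usage beyond the included quota.

    Assumed interpretation of the hybrid model, not a provider's formula.
    """
    overage = max(0.0, units_used - plan.included_units)
    return plan.subscription_fee + plan.unit_rate * overage


if __name__ == "__main__":
    plan = FixedPricePlan(unit_rate=0.5, subscription_fee=100.0, included_units=150)
    for units in (100, 300):
        print(
            units,
            pay_per_use_cost(plan, units),
            subscription_cost(plan),
            hybrid_cost(plan, units),
        )
```

Running the comparison for a low and a high usage level shows why the choice depends on workload: with these made-up rates, pay-per-use is cheaper at 100 units, while the subscription is cheaper at 300 units.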

Dynamic Pricing Model

Dynamic pricing is also known as real-time pricing. This model is more flexible, and prices can vary based on parameters such as cost, time, and location. The cost is calculated whenever a request for the service is made. Service providers change their price lists regularly, and customers can take advantage of this to realize larger gains than with fixed pricing models. The cost of a project can vary based on several factors, such as competitor prices, prices set according to the customer's location, or the distance between the service center and the service being used.
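As a rough illustration of a price that is calculated at request time, the sketch below scales a base price by a demand signal and a location factor. Everything here is an assumption for the example: the function name `dynamic_price`, the `demand_ratio` and `region_multiplier` parameters, and the floor and cap values. Real providers use their own, usually undisclosed, formulas.

```python
def dynamic_price(base_price, demand_ratio, region_multiplier=1.0,
                  floor=0.5, cap=3.0):
    """Quote a price at the moment a request is made.

    base_price:        list price per unit (hypothetical)
    demand_ratio:      current demand / available capacity (hypothetical signal)
    region_multiplier: location-based adjustment (hypothetical)
    floor, cap:        bounds on the demand multiplier (hypothetical)
    """
    # Clamp the demand signal so the quote stays within agreed bounds.
    demand_multiplier = min(cap, max(floor, demand_ratio))
    return base_price * demand_multiplier * region_multiplier


if __name__ == "__main__":
    print(dynamic_price(2.0, 0.1))        # quiet period: floor applies
    print(dynamic_price(2.0, 1.5, 1.25))  # busy period in a pricier region
    print(dynamic_price(2.0, 10.0))       # extreme demand: cap applies
```

Because the quote changes with each request, a customer who can shift work to quiet periods or cheaper locations pays less for the same usage.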

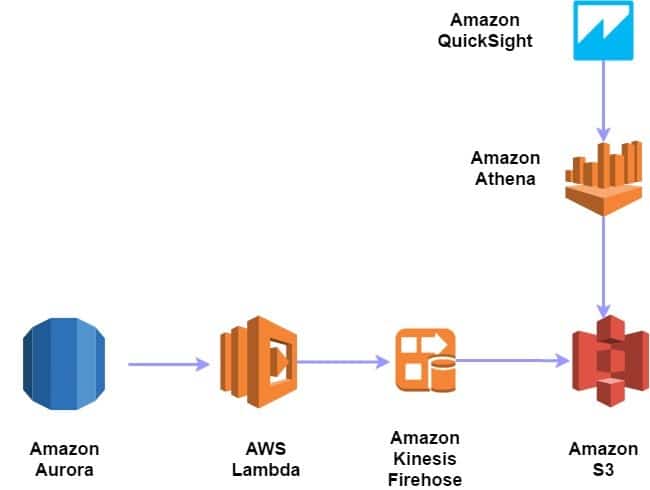

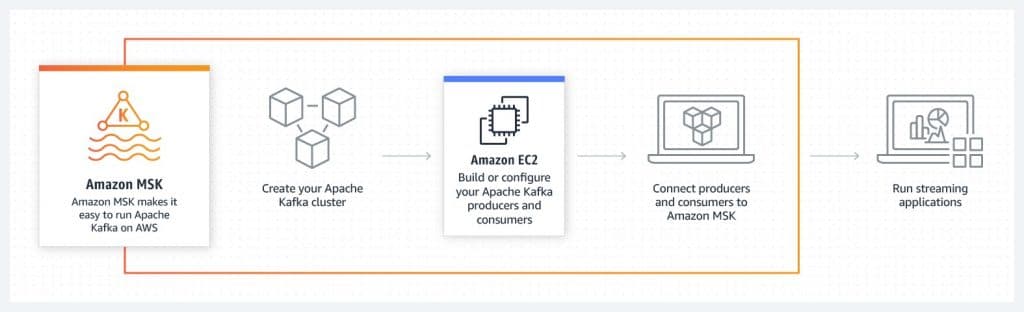

The latest services attracting companies, such as serverless computing, database services, and analytics services, use dynamic pricing models.

As consumers of cloud services, companies expect the highest level of Quality of Service (QoS) at a reasonable price, while cloud computing providers typically focus on maximizing their revenue through different pricing schemes for their services. So for both parties, cost plays a vital role. It becomes even more important for customers to understand the cost accounting of the services they are paying for. Viewed holistically, cloud computing pricing has two aspects:

- First, pricing related to resource consumption, system design, configuration, and optimization.

- Second, pricing based on quality, maintenance, depreciation, and other economic factors.

Below are the categories on which the pricing calculation is based. Teams should work in collaboration and perform cost accounting checks at each step.

- Time-based: Pricing is based on how long a service is used.

- Volume-based: Pricing is based on the volume of a metric, for example storage used or data transferred.

- Priority pricing: Services are labelled and priced according to their priority.

- Responsive pricing: Charging is activated only on service congestion.

- Session-oriented: Pricing is based on how the session is used.

- Usage-based: Pricing is based on the general use of the service for a period, e.g. weekly, monthly.
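Three of the categories above translate directly into simple formulas, sketched here in Python. The function names, rates, and the 0.8 congestion threshold are hypothetical and are there only to show how each charging basis differs.

```python
def time_based_charge(hours_used, rate_per_hour):
    """Time-based: charge grows with how long the service is used."""
    return hours_used * rate_per_hour


def volume_based_charge(units, rate_per_unit):
    """Volume-based: charge grows with the amount of a metric consumed."""
    return units * rate_per_unit


def responsive_charge(units, rate_per_unit, utilization,
                      congestion_threshold=0.8):
    """Responsive: charge applies only while the service is congested.

    utilization and congestion_threshold are assumed signals in the
    range 0.0 to 1.0; real providers define congestion in their own way.
    """
    if utilization < congestion_threshold:
        return 0.0
    return units * rate_per_unit


if __name__ == "__main__":
    print(time_based_charge(8, 0.25))           # 8 hours at 0.25 per hour
    print(volume_based_charge(20, 0.5))         # 20 GB at 0.5 per GB
    print(responsive_charge(20, 0.5, 0.6))      # no congestion, no charge
    print(responsive_charge(20, 0.5, 0.9))      # congested, charge applies
```

Keeping each basis as a separate function makes it easy to run a cost accounting check per category before the charges are added up.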