In very simple terms, a hybrid cloud strategy is a strategy for deciding which workload should go where. As with many simple concepts, however, turning the theory into practice can require a lot of serious thought and answers to a number of questions.

A hybrid cloud strategy is usually based on the answers to four key questions

The four questions that usually set the foundations for a hybrid cloud strategy are:

What are the regulatory requirements?

What are the management implications?

What does this mean for application performance?

What is the total cost of ownership?

These questions are very broad, so answering them usually depends on answering a number of other, more detailed questions, only some of which are likely to have yes/no answers. You may therefore need to develop a weighting or scoring system for assessing priorities.
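One way to picture such a scoring system is a simple weighted sum. The sketch below is only an illustration: the criteria names, the weights, the 0 to 5 scale, and the example scores are all assumptions made up for this example, not recommended values. Questions with yes/no answers may be better handled as pass/fail checks before any scoring takes place.

```python
# Hypothetical weights for the four questions (illustrative only; they sum to 1.0).
WEIGHTS = {
    "regulatory": 0.4,
    "management": 0.2,
    "performance": 0.2,
    "cost": 0.2,
}


def weighted_score(scores, weights=WEIGHTS):
    """Return the weighted sum of per-criterion scores (each on a 0-5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


def rank_options(options, weights=WEIGHTS):
    """Return option names ordered from highest to lowest weighted score."""
    return sorted(
        options,
        key=lambda name: weighted_score(options[name], weights),
        reverse=True,
    )


# Hypothetical scores for one workload; real scores would come from your own analysis.
options = {
    "public_cloud": {"regulatory": 3, "management": 4, "performance": 3, "cost": 4},
    "private_cloud": {"regulatory": 4, "management": 3, "performance": 4, "cost": 3},
    "on_premises": {"regulatory": 5, "management": 2, "performance": 5, "cost": 2},
}

for name in rank_options(options):
    print(f"{name}: {weighted_score(options[name]):.2f}")
```

Changing the weights changes the ranking, which is the point: the weights are where you record what matters most for each workload.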

Pro tip: it’s very risky to assume that everyone will automatically understand what a term means. What’s obvious to you may not be obvious to them. It’s therefore generally recommended to define any significant terms and to make sure that those definitions are easily accessible to anyone who needs them.

What are the regulatory requirements?

This should be the first question you ask when analyzing any situation. Asking it early will stop you from “falling in love” with a potential solution and spending a whole lot of time and effort on an implementation plan, only to discover later that it’s a non-starter with the regulators.

Laws are not always as clear as people would prefer, so you may sometimes need to get specific guidance from lawyers or even go to the regulator directly. Basically, you need to do whatever it takes to make sure you stay on the right side of the law.

For completeness, keeping regulators happy does not necessarily mean you have to use on-premises data centers. In fact, it doesn’t even necessarily mean that you will be restricted to using a private cloud. It may, for example, simply mean that you need to ensure that your cloud provider’s data centers are based in a geographical area the regulator considers acceptable. You will only know if you check.
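As a rough illustration of what "checking" might look like once you know which locations are acceptable, the sketch below compares the regions a workload would run in against an allow-list. The region labels are hypothetical placeholders, not real provider identifiers, and the allow-list itself would have to come from your own legal or regulatory guidance.

```python
# Hypothetical allow-list of regions a regulator is assumed to accept.
ALLOWED_REGIONS = frozenset({"eu-west", "eu-central"})


def disallowed_regions(workload_regions, allowed=ALLOWED_REGIONS):
    """Return the regions in use that are not on the allow-list, sorted by name."""
    return sorted(set(workload_regions) - set(allowed))


# Hypothetical deployment plan for one workload.
planned = ["eu-west", "us-east"]
problems = disallowed_regions(planned)
if problems:
    print("Regions needing review:", ", ".join(problems))
else:
    print("All planned regions are on the allow-list.")
```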

It’s also worth noting that security in general does not depend on location alone (although that’s obviously a factor). Public clouds can be more secure than poorly managed private clouds, but they can also be a playground for cybercriminals. On-premises data centers should be the most secure form of IT infrastructure there is, but only if the company running them knows what it’s doing. In other words, choose your cloud provider(s) with care and ensure that any in-house IT systems are appropriately resourced.

What are the management implications?

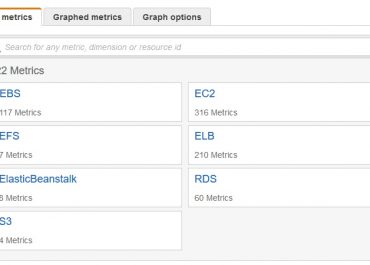

In this context, the term “management implications” has two meanings. First of all, there is the direct meaning of how you will manage your cloud platforms in general and your data and applications in particular. This in itself will have different meanings depending on the specific nature of your hybrid cloud deployment, but as a minimum, you can expect cost optimization to be a factor in your considerations.

The second is the wider meaning of how you will manage your hybrid cloud environment to support your business as a whole. For example, where do you expect to see growth and where do you expect to see contraction? What new areas would you like to develop, and are there any old areas you would like to drop?

What does this mean for application performance?

It may seem strange that this question comes so low on the list, but a lot of the time it’s a fairly minor issue. In fact, it may even be a non-issue. If an application is mission-critical and needs to work at top speed, then on-premises data centers and/or the private cloud are the way to go, assuming you have the capability to manage them. If you don’t, it may be best to accept the slight latency that usually goes along with the public cloud.

For other applications, the public cloud is usually absolutely fine from the perspective of application performance, at least if you choose a decent public cloud provider. In particular, you’ll want clear and enforceable SLAs in place, along with information about the provider’s track record of maintaining uptime.
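When comparing SLAs, it can help to translate an uptime percentage into the downtime it still permits. The small sketch below does that arithmetic. The function name and the 30-day month are assumptions for illustration; a real SLA will define its own measurement period and exclusions.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Return the downtime (in minutes) permitted by an uptime percentage over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


# Compare a few common uptime targets over an assumed 30-day month.
for target in (99.0, 99.9, 99.99):
    print(f"{target}% uptime allows about {allowed_downtime_minutes(target):.1f} minutes of downtime")
```

Over a 30-day month, 99.9% uptime still allows roughly 43 minutes of downtime, so the difference between one "nine" and the next can matter for mission-critical applications.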

What is the total cost of ownership?

The public cloud will usually come out the winner here for all kinds of reasons, at least assuming that you perform cost optimization effectively. It will also let you eliminate resource-draining capital expenditure (on depreciating assets) and exchange it for regular monthly payments, which can be much easier to manage.
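To make the comparison concrete, here is a deliberately simplified sketch of the arithmetic. All figures are hypothetical, and the model is an assumption for illustration only: it ignores staffing, migration, data transfer charges, discounting, and any resale value of hardware, all of which a real total-cost-of-ownership analysis would need to consider.

```python
def on_premises_tco(capex, annual_running_cost, years):
    """Up-front capital expenditure plus running costs over the period."""
    return capex + annual_running_cost * years


def public_cloud_tco(monthly_fee, years):
    """Regular monthly payments over the period, with no up-front spend."""
    return monthly_fee * 12 * years


# Hypothetical figures, chosen only to show the calculation.
years = 5
on_prem = on_premises_tco(capex=100_000, annual_running_cost=20_000, years=years)
cloud = public_cloud_tco(monthly_fee=3_000, years=years)

print(f"On-premises over {years} years: {on_prem}")
print(f"Public cloud over {years} years: {cloud}")
```

With different inputs the result can easily flip, which is why the comparison is worth running with your own numbers rather than assumed ones.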