How Does the AWS S3 Pricing Model Work?

S3 Pricing: Most of the Cost Comes from “Storage” and “Transfer”

| Usage | Cost (USD/month) | Storage | Transfers | Requests |
|---|---|---|---|---|
| Low | 0.15 | 10GB | 1GB | 5,300 |
| Medium | 2.87 | 100GB | 10GB | 53,000 |
| High | 39.63 | 1TB | 100GB | 5,030,000 |

- Low Usage Example

A basic storage configuration, where the cost of storing data on S3 is minimal.

If you store around 10GB of data or less and rarely download it, S3 is a good fit and costs only pennies per month.

Even 5,300 requests cost very little; you would need many times that number before spending even a dollar on web requests.

- Medium Usage Example

An average, medium-sized storage configuration.

S3 is billed monthly, so roughly 50,000 object GET requests spread over 30 days works out to about 1,667 requests a day.

100GB of storage and 10GB of data transfer is a generous allowance for hosting images, text, or audio.

You are only likely to run into higher costs when using S3 to serve static content for a popular, high-traffic website.

- High Usage Example

Even with extreme storage requirements, cost is not a problem.

The high-usage figures show that a terabyte of data can be stored, and 100GB of data transferred, for less than forty dollars a month.

Even five million requests a month works out to more than 150,000 requests a day.
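
The per-day figures quoted in the usage examples are simple averages over the billing month. As a minimal sketch, assuming a 30-day month (the function name `requests_per_day` is just illustrative), the arithmetic looks like this:

```python
DAYS_PER_MONTH = 30  # assumption: the "about 30 days" billing month used above


def requests_per_day(monthly_requests: int, days: int = DAYS_PER_MONTH) -> float:
    """Return the average daily request rate for a monthly request count."""
    return monthly_requests / days


medium = requests_per_day(50_000)     # medium usage example
high = requests_per_day(5_000_000)    # high usage example

print(f"Medium: about {medium:,.0f} requests/day")  # about 1,667
print(f"High: about {high:,.0f} requests/day")      # about 166,667
```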

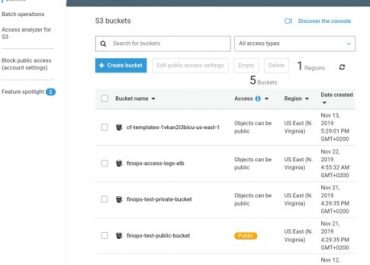

What Are the S3 Pricing Factors?

- Storage Amount: The total amount of data stored.

- Amount of Outbound Data Transferred: A charge for the data downloaded from S3.

- Number of Requests: A charge for every request made.

First Cost Factor (Storage)

It is based on the total size of the objects stored in your S3 buckets.

Pricing is roughly $0.03 per gigabyte per month.

Second Cost Factor (Outbound Data)

Charges are based on the amount of data transferred from S3 to the Internet (Data Transfer Out) and on data transferred between AWS Regions (Inter-Region Data Transfer Out).

Pricing is roughly $0.09 per gigabyte up to the first terabyte, after which the rate starts to decrease slightly.
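
To illustrate how a tiered rate like this might be applied, here is a rough sketch. The $0.09 rate and the one-terabyte threshold come from the text above; the reduced rate `later_rate` is a hypothetical placeholder (the article does not state it), and treating one terabyte as 1,024GB is also an assumption. Check the current AWS price list for real values.

```python
def transfer_out_cost(
    gb_out: float,
    first_tier_gb: float = 1024.0,   # assumption: "first terabyte" taken as 1,024GB
    first_tier_rate: float = 0.09,   # $/GB, from the article
    later_rate: float = 0.085,       # $/GB, HYPOTHETICAL placeholder for the lower tier
) -> float:
    """Return the outbound transfer cost in dollars under a two-tier rate."""
    in_first_tier = min(gb_out, first_tier_gb)
    beyond_first_tier = max(gb_out - first_tier_gb, 0.0)
    return in_first_tier * first_tier_rate + beyond_first_tier * later_rate


# 100GB stays entirely inside the first tier, so only the $0.09 rate applies.
print(f"100GB out: ${transfer_out_cost(100):.2f}")
```

Only the portion above the threshold is billed at the lower rate; the first terabyte is still charged at the full rate.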

Third Cost Factor (Requests)

GET and PUT requests are the main drivers of request costs.

They cost around $0.005 per 1,000 requests.

This is a cost factor you generally won’t have to worry about.
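
The three cost factors can be combined into a rough monthly estimate. The sketch below uses the flat rates quoted in this section and ignores pricing tiers; the function name `estimate_monthly_cost` is illustrative, and the results will not necessarily match the table at the top of the article, which may reflect free-tier allowances or different per-request rates.

```python
STORAGE_RATE = 0.03    # $ per GB stored per month (from the article)
TRANSFER_RATE = 0.09   # $ per GB transferred out (from the article)
REQUEST_RATE = 0.005   # $ per 1,000 requests (from the article)


def estimate_monthly_cost(storage_gb: float, transfer_out_gb: float, requests: int) -> dict:
    """Return a per-factor breakdown and total, in dollars, using flat rates."""
    storage = storage_gb * STORAGE_RATE
    transfer = transfer_out_gb * TRANSFER_RATE
    request = requests / 1000 * REQUEST_RATE
    return {
        "storage": storage,
        "transfer": transfer,
        "requests": request,
        "total": storage + transfer + request,
    }


# Example: 100GB stored, 10GB transferred out, 50,000 requests.
estimate = estimate_monthly_cost(100, 10, 50_000)
for factor, dollars in estimate.items():
    print(f"{factor:>9}: ${dollars:.2f}")
```

In this example storage and transfer account for almost all of the total, while requests add only about a quarter of a dollar.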

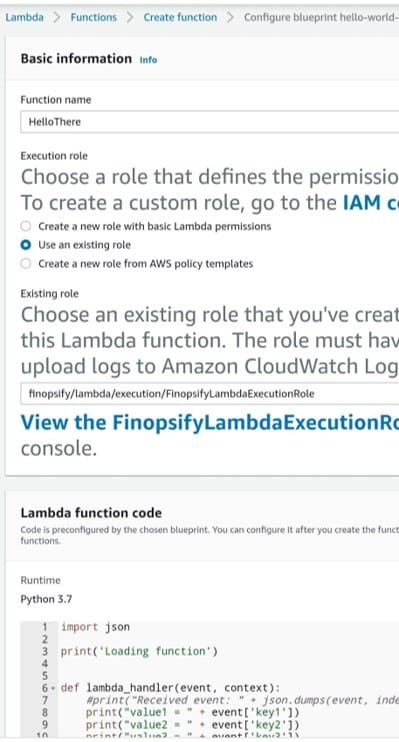

- What Is Amazon’s S3 Calculator?

Using the AWS S3 Calculator, you can build your own storage configuration and get a monthly cost estimate for it. Try it yourself at this link: https://calculator.s3.amazonaws.com/index.html

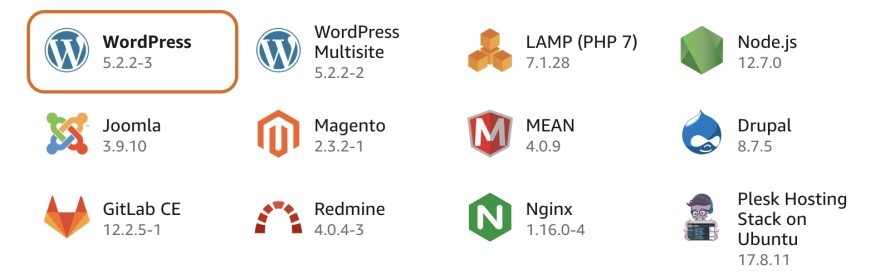

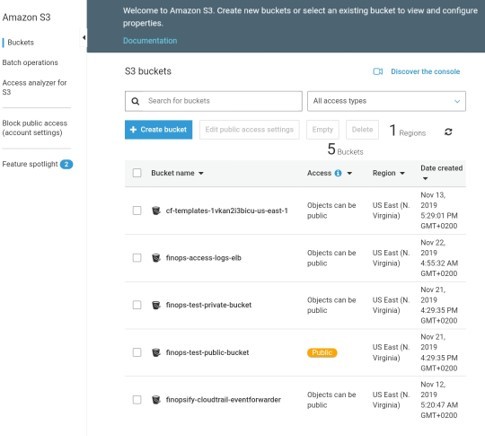

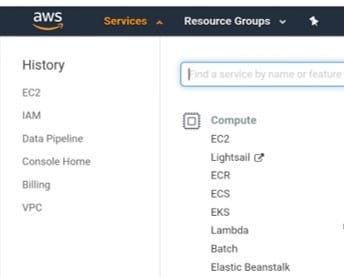

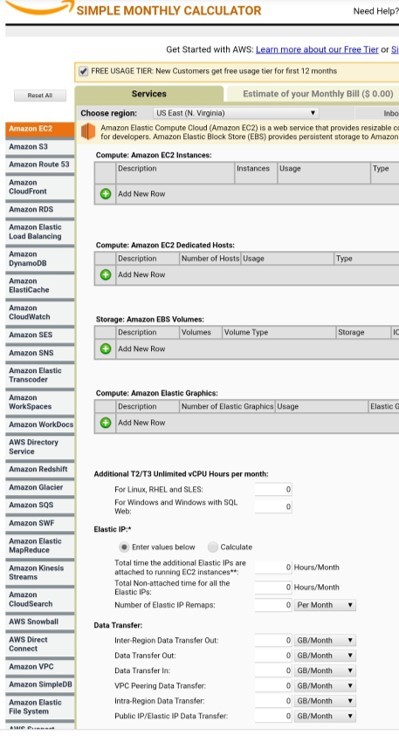

- Services Configuration

AWS S3 Pricing Model

Amazon’s web services are listed on the left of the Services Configuration tab. To focus on S3, select it from the list and configure it as required.

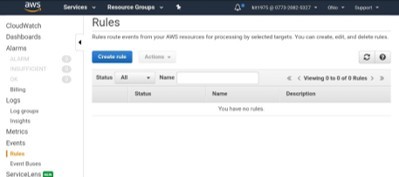

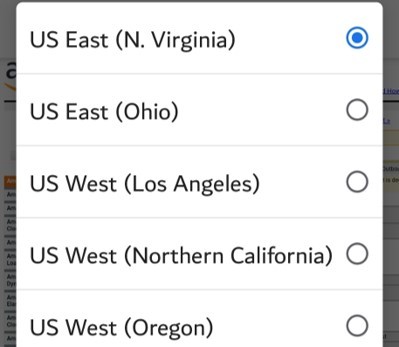

- Region Configuration

Each service can be configured for a specific AWS region using the Region Configuration option. Choose the regions that are closest to your customers.

To choose a region, select it from the drop-down menu and then configure the S3 storage options for that location.

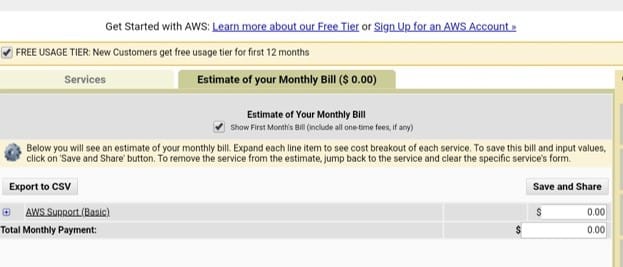

- Monthly Bill Estimate

To check your monthly billing estimate, click the Estimate of your Monthly Bill tab. Click the Save and Share button to get a unique URL for the estimate you created.